Nesevo Virtual Labs

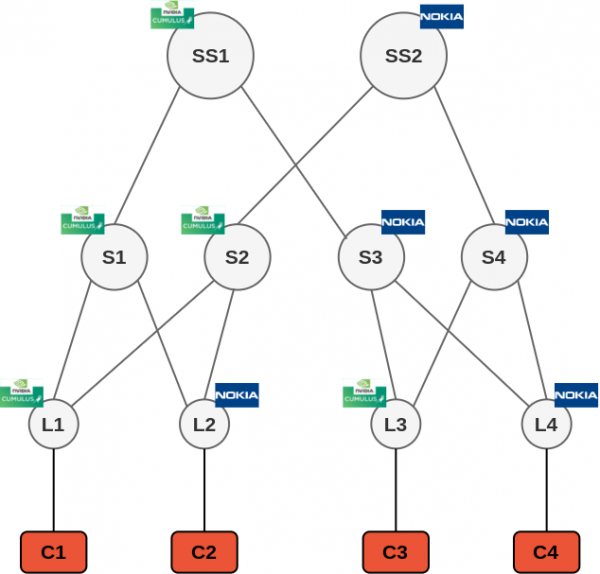

Nokia/Cumulus Multi-Vendor Lab

The goals of this lab are to:

- mix Nokia SR-Linux and Cumulus VXLAN nodes, in spine or leaf roles

- make this environment work for different kinds of setups: cross-DC L2, per-leaf/per-VRF L3 gateways, anycast gateways across or within DCs, etc.

- spot compatibility issues, if any

To reach those goals, we'll use "containerlab", the lab tool from Nokia (https://containerlab.srlinux.dev/). The labs published at https://clabs.netdevops.me/ helped a lot; many thanks to their contributors.

We'll need a Linux host with Docker installed and KVM virtualization extensions enabled (important if your host is itself a VM). Without hardware virtualization support, you won't be able to launch most of the devices.

Installation details are available here: https://containerlab.srlinux.dev/install/

Once containerlab is installed, you'll need to register the NOS images you'll use. The Cumulus image is available on a public registry, but the Nokia, Arista and Juniper images are not: you'll need a vendor support account to retrieve them. Once downloaded (typically as a tar archive), the image can be loaded with "docker load":

For private images:

sudo docker load -i 21.6.1-235.tar.xz    # Nokia SR Linux

sudo docker load -i cEOS64-lab-4.26.1F.tar    # Arista cEOS

For public images:

sudo docker pull networkop/cx:4.3.0    # Cumulus

To verify the installed images, run "sudo docker images":

plancastre@containerlab:~/00.LAB/senss$ sudo docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

networkop/cx 4.3.0 a40541ed15ba 2 weeks ago 733MB

srlinux 21.6.1-235 e29aed30e83f 3 weeks ago 1.14GB

networkop/ignite dev dcc5eff29355 4 weeks ago 37.9MB

networkop/kernel 4.19 e794d8875f83 3 months ago 88MB

frrouting/frr v7.5.1 c3e13a4c5918 3 months ago 123MB
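With several NOS images involved, it can help to compare the "docker images" listing with what the topology file expects before deploying. Below is a minimal Python sketch, assuming the listing has been captured as text (for example with "sudo docker images > images.txt"); the REQUIRED list and the helper names are our own, not part of containerlab or Docker.

```python
from __future__ import annotations

# Images this lab expects to find locally, as (repository, tag) pairs.
REQUIRED = [("srlinux", "21.6.1-235"), ("networkop/cx", "4.3.0")]

# Excerpt of `sudo docker images` output, as shown above.
SAMPLE = """\
REPOSITORY         TAG          IMAGE ID       CREATED        SIZE
networkop/cx       4.3.0        a40541ed15ba   2 weeks ago    733MB
srlinux            21.6.1-235   e29aed30e83f   3 weeks ago    1.14GB
networkop/ignite   dev          dcc5eff29355   4 weeks ago    37.9MB
"""


def installed_images(listing: str) -> set[tuple[str, str]]:
    """Return the (repository, tag) pairs found in a `docker images` listing."""
    images = set()
    for line in listing.splitlines()[1:]:  # the first line is the header
        fields = line.split()
        if len(fields) >= 2:
            images.add((fields[0], fields[1]))
    return images


def missing_images(listing: str, required=REQUIRED) -> list[str]:
    """Return the required images, as 'repo:tag', that are absent from the listing."""
    present = installed_images(listing)
    return [f"{repo}:{tag}" for repo, tag in required if (repo, tag) not in present]


print(missing_images(SAMPLE) or "all lab images are present")
```

Parsing the column layout is the fragile part; "docker images --format '{{.Repository}}:{{.Tag}}'" prints one repo:tag per line and would make the parsing trivial.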

Once you've got all your images, to run the lab, you'll need a .yml file defining the topology to run.

In our lab, we started from the 5-stage Clos (min-5clos) lab file from the Nokia containerlab site, which we forked.

The file has a "kinds" section where node templates can be defined, so that common parameters don't have to be repeated for every node. For images, make sure to use the repository name plus the tag.

Note: If the tag is "latest" it is not needed.

All supported "kinds" are listed here: https://containerlab.srlinux.dev/manual/kinds/kinds/
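Conceptually, each node picks up the parameters of its kind and may override any of them, and Docker treats an image reference without a tag as ":latest". The Python sketch below only illustrates that resolution logic under those assumptions; it is not containerlab's code, and "normalize_image" and "resolve_node" are hypothetical names.

```python
def normalize_image(ref: str) -> str:
    """Append ':latest' when an image reference carries no tag.

    Only the part after the last '/' is inspected, so a registry port such as
    'localhost:5000/img' is not mistaken for a tag.
    """
    name = ref.rsplit("/", 1)[-1]
    return ref if ":" in name else f"{ref}:latest"


def resolve_node(node: dict, kinds: dict) -> dict:
    """Overlay node-level settings on top of the defaults of the node's kind."""
    merged = {**kinds.get(node["kind"], {}), **node}  # node values win
    if "image" in merged:
        merged["image"] = normalize_image(merged["image"])
    return merged


# The "kinds" section of this lab, expressed as Python data.
KINDS = {
    "srl": {"image": "srlinux:21.6.1-235"},
    "linux": {"image": "ghcr.io/hellt/network-multitool"},
    "cvx": {"image": "networkop/cx:4.3.0", "runtime": "docker"},
}

print(resolve_node({"kind": "srl", "type": "ixrd2"}, KINDS))
print(resolve_node({"kind": "linux"}, KINDS)["image"])  # -> ghcr.io/hellt/network-multitool:latest
```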

Note: YAML is indentation-sensitive, so keep the two-space indentation used to define the hierarchy. The lab diagram and the associated YAML file are shown below.

Node information

L3 information

| Device | OS | ASN | Loopback (VTEP IP) | swp1 / ethernet-1/1 (eth1 on clients) | swp2 / ethernet-1/2 | swp3 / ethernet-1/3 | SVI / IRB |
|---|---|---|---|---|---|---|---|
| client1 | Linux | none | none | vlan100: 192.168.1.1/24; vlan200: 192.168.2.1/24, route 192.168.200.0/24 via 192.168.2.254 | none | none | none |
| client2 | Linux | none | none | vlan100: 192.168.1.2/24; vlan200: 192.168.2.2/24, route 192.168.200.0/24 via 192.168.2.254 | none | none | none |
| client3 | Linux | none | none | vlan100: 192.168.1.3/24; vlan200: 192.168.200.1/24, route 192.168.2.0/24 via 192.168.200.254 | none | none | none |
| client4 | Linux | none | none | vlan100: 192.168.1.4/24; vlan200: 192.168.200.2/24, route 192.168.2.0/24 via 192.168.200.254 | none | none | none |
| leaf1 | CVX | 65011 | 10.255.255.5/32 | 10.254.254.8/31 | 10.254.254.10/31 | trunk vlan 100,200 | vlan200: 192.168.2.254/24 |
| leaf2 | SRLinux | 65012 | 10.255.255.6/32 | 10.254.254.12/31 | 10.254.254.14/31 | trunk vlan 100,200 | irb0.200: 192.168.2.254/24 |
| leaf3 | CVX | 65013 | 10.255.255.7/32 | 10.254.254.22/31 | 10.254.254.24/31 | trunk vlan 100,200 | vlan200: 192.168.200.254/24 |
| leaf4 | SRLinux | 65014 | 10.255.255.8/32 | 10.254.254.26/31 | 10.254.254.28/31 | trunk vlan 100,200 | irb0.200: 192.168.200.254/24 |
| spine1 | CVX | 65001 | 10.255.255.1/32 | 10.254.254.9/31 | 10.254.254.13/31 | 10.254.254.0/31 | none |
| spine2 | CVX | 65001 | 10.255.255.2/32 | 10.254.254.11/31 | 10.254.254.15/31 | 10.254.254.16/31 | none |
| spine3 | SRLinux | 65002 | 10.255.255.3/32 | 10.254.254.23/31 | 10.254.254.27/31 | 10.254.254.18/31 | none |
| spine4 | SRLinux | 65002 | 10.255.255.4/32 | 10.254.254.25/31 | 10.254.254.29/31 | 10.254.254.20/31 | none |
| superspine1 | CVX | 65003 | 10.255.255.9/32 | 10.254.254.1/31 | 10.254.254.19/31 | none | none |
| superspine2 | SRLinux | 65003 | 10.255.255.10/32 | 10.254.254.17/31 | 10.254.254.21/31 | none | none |
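The /31 point-to-point addressing in this table can be sanity-checked mechanically: both ends of each link must fall in the same /31, and no address may be reused. A minimal Python sketch using only the standard ipaddress module; the link list is our own transcription of the table and of the topology file below, and "check_links" is a hypothetical helper name.

```python
import ipaddress

FABRIC = "10.254.254.{}/31"  # all point-to-point links are carved out of 10.254.254.0/24

# (endpoint A, last octet A, endpoint B, last octet B), transcribed from the table.
LINKS = [
    ("leaf1:swp1", 8, "spine1:swp1", 9),
    ("leaf1:swp2", 10, "spine2:swp1", 11),
    ("leaf2:e1-1", 12, "spine1:swp2", 13),
    ("leaf2:e1-2", 14, "spine2:swp2", 15),
    ("spine1:swp3", 0, "superspine1:swp1", 1),
    ("spine2:swp3", 16, "superspine2:e1-1", 17),
    ("leaf3:swp1", 22, "spine3:e1-1", 23),
    ("leaf3:swp2", 24, "spine4:e1-1", 25),
    ("leaf4:e1-1", 26, "spine3:e1-2", 27),
    ("leaf4:e1-2", 28, "spine4:e1-2", 29),
    ("spine3:e1-3", 18, "superspine1:swp2", 19),
    ("spine4:e1-3", 20, "superspine2:e1-2", 21),
]


def check_links(links):
    """Return a list of problems; an empty list means the addressing is consistent."""
    problems, seen = [], {}
    for end_a, host_a, end_b, host_b in links:
        a = ipaddress.ip_interface(FABRIC.format(host_a))
        b = ipaddress.ip_interface(FABRIC.format(host_b))
        if a.network != b.network:
            problems.append(f"{end_a} <-> {end_b}: {a} and {b} are not in the same /31")
        for end, iface in ((end_a, a), (end_b, b)):
            if iface.ip in seen:
                problems.append(f"{iface.ip} used by both {seen[iface.ip]} and {end}")
            seen[iface.ip] = end
    return problems


print(check_links(LINKS) or "all /31 links are consistent")
```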

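VXLAN/BGP information

Note: in our lab, the route-distinguisher is built as <loopback_ip>:<vlan_id>; for the IP-VRF, the L3 VNI is used in place of the VLAN ID (e.g. 10.255.255.6:10200 in the outputs below).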

| Service | VLAN ID | Route-target | VNI | Comments |
|---|---|---|---|---|
| L2 cross-DC | 100 | target:65000:100 | 100 | the 4 clients are in the same broadcast domain / subnet |
| L3VPN cross-DC | 200 | target:65000:200 (L2); target:65000:10200 (L3) | 200 (L2); 10200 (routing) | the 4 clients are split across 2 subnets, and routed traffic is carried in a dedicated VNI. Note that VMTO is not implemented in the current setup, so cross-DC traffic is not optimized. |
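The naming convention can be captured in two small helpers. A minimal Python sketch, assuming the RD layout described in the note above and a shared route-target administrator of 65000 (as in the table); note that the outputs further down show leaf1 (Cumulus) also advertising an auto-derived RT:65011:10200 for the L3 VNI, so real devices may deviate from this helper. Function names are hypothetical.

```python
import ipaddress

RT_ASN = 65000  # administrator field shared by all route-targets in this lab


def route_distinguisher(loopback: str, local_id: int) -> str:
    """Build an RD as <loopback_ip>:<id>, id being the VLAN ID or the L3 VNI."""
    ipaddress.IPv4Address(loopback)  # raises ValueError on a malformed address
    if not 0 <= local_id <= 0xFFFF:
        raise ValueError("an IPv4-based RD only has a 16-bit assigned-number field")
    return f"{loopback}:{local_id}"


def route_target(vni: int, asn: int = RT_ASN) -> str:
    """Build a route-target in the target:<asn>:<vni> notation of the table."""
    return f"target:{asn}:{vni}"


# service -> (VLAN ID, L2 VNI, L3 VNI or None), from the table above
SERVICES = {"L2 cross-DC": (100, 100, None), "L3VPN cross-DC": (200, 200, 10200)}
LOOPBACK = "10.255.255.6"  # leaf2

for name, (vlan, l2_vni, l3_vni) in SERVICES.items():
    print(name, route_distinguisher(LOOPBACK, vlan), route_target(l2_vni))
    if l3_vni is not None:
        print("  ip-vrf:", route_distinguisher(LOOPBACK, l3_vni), route_target(l3_vni))
```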

Lab diagram

YAML lab file

# topology documentation: http://containerlab.srlinux.dev/lab-examples/min-5clos/
name: lab-srl-cum

topology:
  kinds:
    srl:
      image: srlinux:21.6.1-235
    linux:
      image: ghcr.io/hellt/network-multitool
    cvx:
      image: networkop/cx:4.3.0
      runtime: docker
  nodes:
    leaf1:
      kind: cvx
    leaf2:
      kind: srl
      type: ixrd2
    leaf3:
      kind: cvx
    leaf4:
      kind: srl
      type: ixrd2
    spine1:
      kind: cvx
    spine2:
      kind: cvx
    spine3:
      kind: srl
      type: ixr6
    spine4:
      kind: srl
      type: ixr6
    superspine1:
      kind: cvx
    superspine2:
      kind: srl
      type: ixr6
    client1:
      kind: linux
    client2:
      kind: linux
    client3:
      kind: linux
    client4:
      kind: linux
  links:
    # leaf to spine links POD1
    - endpoints: ["leaf1:swp1", "spine1:swp1"]
    - endpoints: ["leaf1:swp2", "spine2:swp1"]
    - endpoints: ["leaf2:e1-1", "spine1:swp2"]
    - endpoints: ["leaf2:e1-2", "spine2:swp2"]
    # spine to superspine links POD1
    - endpoints: ["spine1:swp3", "superspine1:swp1"]
    - endpoints: ["spine2:swp3", "superspine2:e1-1"]
    # leaf to spine links POD2
    - endpoints: ["leaf3:swp1", "spine3:e1-1"]
    - endpoints: ["leaf3:swp2", "spine4:e1-1"]
    - endpoints: ["leaf4:e1-1", "spine3:e1-2"]
    - endpoints: ["leaf4:e1-2", "spine4:e1-2"]
    # spine to superspine links POD2
    - endpoints: ["spine3:e1-3", "superspine1:swp2"]
    - endpoints: ["spine4:e1-3", "superspine2:e1-2"]
    # client connection links
    - endpoints: ["client1:eth1", "leaf1:swp3"]
    - endpoints: ["client2:eth1", "leaf2:e1-3"]
    - endpoints: ["client3:eth1", "leaf3:swp3"]
    - endpoints: ["client4:eth1", "leaf4:e1-3"]

Once the nodes are defined, we have to define the needed connections between the nodes.

Each endpoint is written as "<node_name>:<interface_name>".
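Before deploying, the links section can be sanity-checked: every endpoint should follow the "<node_name>:<interface_name>" form, reference a declared node, and be wired only once. A minimal Python sketch with the topology inlined as Python data; reading the real .clab.yml file would additionally require a YAML parser such as PyYAML (an assumption, not shown). "check_topology" is a hypothetical helper.

```python
from collections import Counter

NODES = {"leaf1", "leaf2", "leaf3", "leaf4", "spine1", "spine2", "spine3", "spine4",
         "superspine1", "superspine2", "client1", "client2", "client3", "client4"}

LINKS = [
    ["leaf1:swp1", "spine1:swp1"], ["leaf1:swp2", "spine2:swp1"],
    ["leaf2:e1-1", "spine1:swp2"], ["leaf2:e1-2", "spine2:swp2"],
    ["spine1:swp3", "superspine1:swp1"], ["spine2:swp3", "superspine2:e1-1"],
    ["leaf3:swp1", "spine3:e1-1"], ["leaf3:swp2", "spine4:e1-1"],
    ["leaf4:e1-1", "spine3:e1-2"], ["leaf4:e1-2", "spine4:e1-2"],
    ["spine3:e1-3", "superspine1:swp2"], ["spine4:e1-3", "superspine2:e1-2"],
    ["client1:eth1", "leaf1:swp3"], ["client2:eth1", "leaf2:e1-3"],
    ["client3:eth1", "leaf3:swp3"], ["client4:eth1", "leaf4:e1-3"],
]


def check_topology(nodes, links):
    """Return a list of problems found in the links section (empty if none)."""
    problems = []
    usage = Counter(endpoint for link in links for endpoint in link)
    for endpoint, count in usage.items():
        node, sep, iface = endpoint.partition(":")
        if not sep or not iface:
            problems.append(f"{endpoint}: expected <node_name>:<interface_name>")
        elif node not in nodes:
            problems.append(f"{endpoint}: unknown node {node!r}")
        if count > 1:
            problems.append(f"{endpoint}: used in {count} links")
    return problems


print(check_topology(NODES, LINKS) or "links section looks consistent")
```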

Once everything is in place, deploy the topology with sudo, for example:

sudo containerlab deploy --topo topo.clab.yml --reconfigure

Note: the "--reconfigure" option is used here so that you don't have to destroy the lab manually when updating the topology and relaunching it.

Regarding the YAML file, it's also possible to generate one quickly, for example with the "containerlab gen -n 3tier --nodes 4,2,1" command (see https://containerlab.srlinux.dev/cmd/generate/).

Note that the "tier-x" numbering in containerlab is the reverse of RFC 7938: in the RFC, Tier 1 is the superspine level, whereas in containerlab tier 1 is the leaf level.
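Since the two numbering schemes differ only by direction, converting between them is a one-line formula: with N tiers, RFC tier = N + 1 - containerlab tier. A small Python sketch, assuming (as stated above) that containerlab's tier 1 is the leaf level and that the first value of "--nodes" sizes that tier; the function name is hypothetical.

```python
def clab_to_rfc7938_tier(clab_tier: int, total_tiers: int) -> int:
    """Convert a containerlab tier number into the RFC 7938 tier number.

    containerlab counts from the leaves up (tier 1 = leaf), while RFC 7938
    counts from the top of the Clos down (Tier 1 = superspine), so the
    scale is simply reversed.
    """
    if not 1 <= clab_tier <= total_tiers:
        raise ValueError(f"tier must be between 1 and {total_tiers}")
    return total_tiers + 1 - clab_tier


# "--nodes 4,2,1": containerlab tiers 1, 2 and 3 hold 4, 2 and 1 nodes.
sizes = [4, 2, 1]
for clab_tier, count in enumerate(sizes, start=1):
    rfc_tier = clab_to_rfc7938_tier(clab_tier, len(sizes))
    print(f"containerlab tier{clab_tier} ({count} nodes) = RFC 7938 Tier {rfc_tier}")
```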

At the end of the deployment, containerlab prints the details of the lab:

INFO[0011] Writing /etc/hosts file

+----+------------------------------+--------------+---------------------------------+-------+-------+---------+-----------------+----------------------+

| # | Name | Container ID | Image | Kind | Group | State | IPv4 Address | IPv6 Address |

+----+------------------------------+--------------+---------------------------------+-------+-------+---------+-----------------+----------------------+

| 1 | clab-lab-srl-cum-client1 | 25e2bbf6b13f | ghcr.io/hellt/network-multitool | linux | | running | 172.20.20.2/24 | 2001:172:20:20::2/64 |

| 2 | clab-lab-srl-cum-client2 | 17d82d5a6e21 | ghcr.io/hellt/network-multitool | linux | | running | 172.20.20.4/24 | 2001:172:20:20::4/64 |

| 3 | clab-lab-srl-cum-client3 | f8d78527c1b1 | ghcr.io/hellt/network-multitool | linux | | running | 172.20.20.6/24 | 2001:172:20:20::6/64 |

| 4 | clab-lab-srl-cum-client4 | 913a0172d0c5 | ghcr.io/hellt/network-multitool | linux | | running | 172.20.20.10/24 | 2001:172:20:20::a/64 |

| 5 | clab-lab-srl-cum-leaf1 | 9ffc334af2b1 | networkop/cx:4.3.0 | cvx | | running | 172.20.20.9/24 | 2001:172:20:20::9/64 |

| 6 | clab-lab-srl-cum-leaf2 | 7d3eb855d749 | srlinux:21.6.1-235 | srl | | running | 172.20.20.14/24 | 2001:172:20:20::e/64 |

| 7 | clab-lab-srl-cum-leaf3 | e0d36ffc606a | networkop/cx:4.3.0 | cvx | | running | 172.20.20.7/24 | 2001:172:20:20::7/64 |

| 8 | clab-lab-srl-cum-leaf4 | e6753b1d099e | srlinux:21.6.1-235 | srl | | running | 172.20.20.12/24 | 2001:172:20:20::c/64 |

| 9 | clab-lab-srl-cum-spine1 | a7e63993d052 | networkop/cx:4.3.0 | cvx | | running | 172.20.20.5/24 | 2001:172:20:20::5/64 |

| 10 | clab-lab-srl-cum-spine2 | 3124250d5b75 | networkop/cx:4.3.0 | cvx | | running | 172.20.20.3/24 | 2001:172:20:20::3/64 |

| 11 | clab-lab-srl-cum-spine3 | 5e300ca3f66f | srlinux:21.6.1-235 | srl | | running | 172.20.20.11/24 | 2001:172:20:20::b/64 |

| 12 | clab-lab-srl-cum-spine4 | 0a59e60a596b | srlinux:21.6.1-235 | srl | | running | 172.20.20.13/24 | 2001:172:20:20::d/64 |

| 13 | clab-lab-srl-cum-superspine1 | 3d9935413fc2 | networkop/cx:4.3.0 | cvx | | running | 172.20.20.8/24 | 2001:172:20:20::8/64 |

| 14 | clab-lab-srl-cum-superspine2 | 5bc58d423e2c | srlinux:21.6.1-235 | srl | | running | 172.20.20.15/24 | 2001:172:20:20::f/64 |

+----+------------------------------+--------------+---------------------------------+-------+-------+---------+-----------------+----------------------+

containerlab also updates the /etc/hosts file of the host and generates an Ansible inventory file.

There are different ways to connect to the containers: with "docker exec" commands, or by SSH directly to the management IP addresses listed above.

For example :

sudo docker exec -it clab-lab-srl-cum-spine1 bash

-->>> opens a bash shell on the Cumulus device (from which you run the various "net" commands)

sudo docker exec -it clab-lab-srl-cum-leaf2 sr_cli

-->>> opens the CLI on an SR Linux device

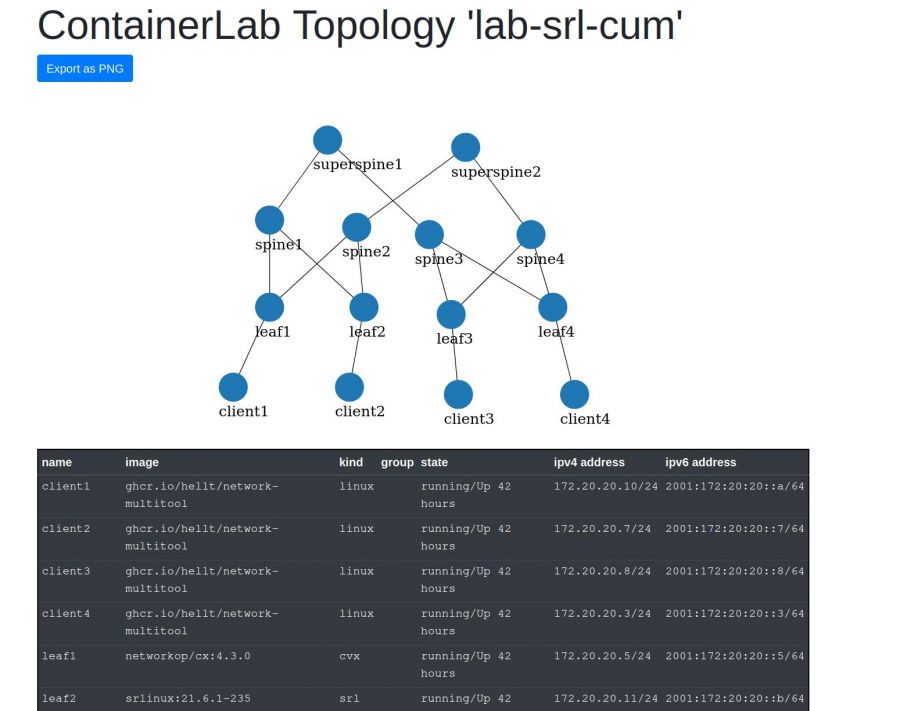

To complete the setup, containerlab can also launch a web server showing the lab topology as a graph, along with node details ("containerlab graph --topo <topology>.yml").

By default, it listens on port 50080 of the host.

You can then browse to the following web page showing the topology and lab information.

Finally, to stop a lab, run "sudo containerlab destroy --topo <topology>.yml".

At this step, nodes are running, connections are done, but configurations are empty.

So, it's time to configure them :)

The configuration files are attached to this blog post. Once validated, they can be exported and loaded by the tool when the lab is launched. Here is how:

First, once you've finished your hand-made configuration, run the following command to save it:

containerlab save -t quickstart.clab.yml

Note that in this lab, this only works for the Nokia nodes. CVX configurations have to be loaded another way (or pushed manually).

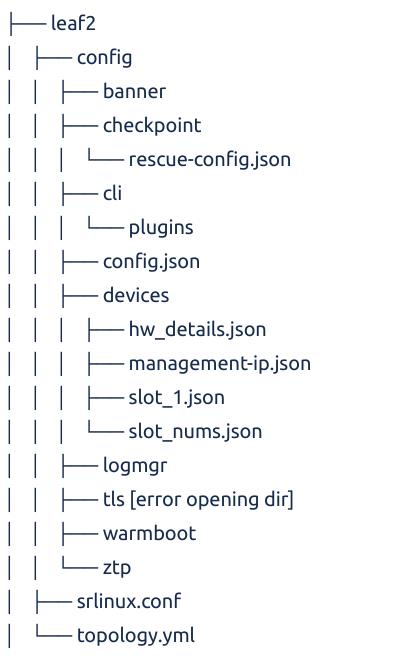

It creates one folder per node inside the lab folder, which gives the following tree:

Note: for Cumulus, configuration files can apparently be retrieved by adding the following parameters to the lab configuration file (to be tested). Some lab samples are available here: https://github.com/hellt/clabs/blob/main/labs/cvx04/symm-mh.clab.yml

Now that the lab is running, here are the commands to check that everything works as expected:

-------------------------------------------

Check L2 tables ///

-------------------------------------------

Nokia :

A:leaf2# show network-instance mac-vrf-100 bridge-table mac-table all

----------------------------------------------------------------------------------------------------------------------------------------------------------

Mac-table of network instance mac-vrf-100

----------------------------------------------------------------------------------------------------------------------------------------------------------

+--------------------+-----------------------------------------+------------+-------------+---------+--------+-----------------------------------------+

| Address | Destination | Dest Index | Type | Active | Aging | Last Update |

+====================+=========================================+============+=============+=========+========+=========================================+

| AA:C1:AB:0C:60:D6 | vxlan-interface:vxlan1.100 | 6379518792 | evpn | true | N/A | 2021-10-08T13:06:17.000Z |

| | vtep:10.255.255.8 vni:100 | 67 | | | | |

| AA:C1:AB:23:31:04 | ethernet-1/3.100 | 2 | learnt | true | 300 | 2021-10-08T13:06:10.000Z |

| AA:C1:AB:4B:FF:71 | vxlan-interface:vxlan1.100 | 6379518792 | evpn | true | N/A | 2021-10-08T12:55:28.000Z |

| | vtep:10.255.255.5 vni:100 | 60 | | | | |

| AA:C1:AB:B5:79:50 | vxlan-interface:vxlan1.100 | 6379518792 | evpn | true | N/A | 2021-10-08T12:43:37.000Z |

| | vtep:10.255.255.7 vni:100 | 64 | | | | |

+--------------------+-----------------------------------------+------------+-------------+---------+--------+-----------------------------------------+

Total Irb Macs : 0 Total 0 Active

Total Static Macs : 0 Total 0 Active

Total Duplicate Macs : 0 Total 0 Active

Total Learnt Macs : 1 Total 1 Active

Total Evpn Macs : 3 Total 3 Active

Total Evpn static Macs : 0 Total 0 Active

Total Irb anycast Macs : 0 Total 0 Active

Total Macs : 4 Total 4 Active

----------------------------------------------------------------------------------------------------------------------------------------------------------

--{ + running }--[ ]--

Note: entries of type "learnt" are MACs learned locally; entries of type "evpn" are MACs learned through EVPN over VXLAN.

Cumulus :

root@leaf1-cum-dc1:/# net show bridge macs vlan 100

VLAN Master Interface MAC TunnelDest State Flags LastSeen

---- ------ --------- ----------------- ---------- --------- ------------ --------

100 bridge swp3 aa:c1:ab:4b:ff:71 00:00:02

100 bridge swp3 aa:c1:ab:4f:f2:e7 permanent 02:17:21

100 bridge vni100 22:2d:67:f1:bb:26 permanent 02:17:21

100 bridge vni100 aa:c1:ab:0c:60:d6 extern_learn <1 sec

100 bridge vni100 aa:c1:ab:23:31:04 extern_learn 00:00:07

100 bridge vni100 aa:c1:ab:b5:79:50 extern_learn 00:13:28

root@leaf1-cum-dc1:/# net show bridge macs vlan 200

VLAN Master Interface MAC TunnelDest State Flags LastSeen

---- ------ --------- ----------------- ---------- --------- ------------ --------

200 bridge swp3 aa:c1:ab:4b:ff:71 00:08:46

200 bridge swp3 aa:c1:ab:4f:f2:e7 permanent 02:16:36

200 bridge vni200 00:00:5e:00:01:01 static sticky 00:14:26

200 bridge vni200 1e:0d:aa:46:53:b1 permanent 02:16:36

200 bridge vni200 02:77:6b:ff:00:41 static sticky 01:00:52

200 bridge vni200 02:f8:b8:ff:00:41 static sticky 00:14:26

200 bridge vni200 aa:c1:ab:b5:79:50 extern_learn 00:11:51

200 bridge vni10200 02:77:6b:ff:00:00 extern_learn 00:53:02

200 bridge vni10200 02:f8:b8:ff:00:00 extern_learn 00:14:26

200 bridge vni10200 86:5e:aa:ca:50:be permanent 02:16:36

Note: MACs with the permanent flag belong to local interfaces, physical or logical (VTEP).

Note 2: extern_learn MACs are the ones learned through the VXLAN fabric (and mapped to the corresponding vniXXX interface).
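These two notes can be turned into a quick filter over the MAC table. A minimal Python sketch, assuming the table has been captured as text with the header lines removed; it only looks for the permanent, extern_learn and static keywords seen in the outputs above, and the category names are our own.

```python
from __future__ import annotations

from collections import defaultdict

# Data rows of `net show bridge macs vlan 100` on leaf1 (header lines removed).
SAMPLE = """\
100 bridge swp3 aa:c1:ab:4b:ff:71 00:00:02
100 bridge swp3 aa:c1:ab:4f:f2:e7 permanent 02:17:21
100 bridge vni100 22:2d:67:f1:bb:26 permanent 02:17:21
100 bridge vni100 aa:c1:ab:0c:60:d6 extern_learn <1 sec
100 bridge vni100 aa:c1:ab:23:31:04 extern_learn 00:00:07
100 bridge vni100 aa:c1:ab:b5:79:50 extern_learn 00:13:28
"""


def classify(line: str) -> tuple[str, str, str]:
    """Return (mac, interface, category) for one data row."""
    fields = line.split()
    interface, mac, extra = fields[2], fields[3], set(fields[4:])
    if "permanent" in extra:
        category = "local-interface"  # MAC of a local port or of the VTEP device
    elif "extern_learn" in extra:
        category = "evpn"             # installed from EVPN, reached over VXLAN
    elif "static" in extra:
        category = "static"           # e.g. the "static sticky" rows in vlan 200
    else:
        category = "local-learned"    # dynamically learned on a local port
    return mac, interface, category


def group_by_category(table: str) -> dict[str, list[str]]:
    """Group the MAC addresses of a table by category."""
    groups = defaultdict(list)
    for line in table.splitlines():
        if line.strip():
            mac, _interface, category = classify(line)
            groups[category].append(mac)
    return dict(groups)


for category, macs in group_by_category(SAMPLE).items():
    print(f"{category:16} {macs}")
```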

-------------------------------------------

Check ARP tables ///

-------------------------------------------

Nokia :

A:leaf2# show arpnd arp-entries

+---------------+---------------+-----------------+---------------+-----------------------------+---------------------------------------------------------+

| Interface | Subinterface | Neighbor | Origin | Link layer address | Expiry |

+===============+===============+=================+===============+=============================+=========================================================+

| ethernet-1/1 | 0 | 10.254.254.13 | dynamic | AA:C1:AB:59:65:EA | 3 hours from now |

| ethernet-1/2 | 0 | 10.254.254.15 | dynamic | AA:C1:AB:CB:F8:8A | 3 hours from now |

| irb0 | 200 | 192.168.2.1 | evpn | AA:C1:AB:4B:FF:71 | |

| irb0 | 200 | 192.168.2.2 | dynamic | AA:C1:AB:23:31:04 | 3 hours from now |

| mgmt0 | 0 | 172.20.20.1 | dynamic | 02:42:63:9E:74:03 | 2 hours from now |

+---------------+---------------+-----------------+---------------+-----------------------------+---------------------------------------------------------+

----------------------------------------------------------------------------------------------------------------------------------------------------------

Total entries : 5 (0 static, 5 dynamic)

----------------------------------------------------------------------------------------------------------------------------------------------------------

--{ + running }--[ ]--

Note: on Nokia, you have to filter the entries by subinterface and then find the L3VPN (IP-VRF) it is attached to:

A:leaf2# show arpnd arp-entries subinterface 200

+---------------+---------------+-----------------+---------------+-----------------------------+---------------------------------------------------------+

| Interface | Subinterface | Neighbor | Origin | Link layer address | Expiry |

+===============+===============+=================+===============+=============================+=========================================================+

| irb0 | 200 | 192.168.2.1 | evpn | AA:C1:AB:4B:FF:71 | |

| irb0 | 200 | 192.168.2.2 | dynamic | AA:C1:AB:23:31:04 | 3 hours from now |

+---------------+---------------+-----------------+---------------+-----------------------------+---------------------------------------------------------+

----------------------------------------------------------------------------------------------------------------------------------------------------------

Total entries : 2 (0 static, 2 dynamic)

----------------------------------------------------------------------------------------------------------------------------------------------------------

--{ + running }--[ ]--

Cumulus :

Here we retrieve all ARP entries known to EVPN:

root@leaf1-cum-dc1:/# net show evpn arp-cache vni all

VNI 100 #ARP (IPv4 and IPv6, local and remote) 2

Flags: I=local-inactive, P=peer-active, X=peer-proxy

Neighbor Type Flags State MAC Remote ES/VTEP Seq #'s

192.168.1.3 remote active aa:c1:ab:b5:79:50 10.255.255.7 0/0

192.168.1.1 local active aa:c1:ab:4b:ff:71 0/0

VNI 200 #ARP (IPv4 and IPv6, local and remote) 4

Flags: I=local-inactive, P=peer-active, X=peer-proxy

Neighbor Type Flags State MAC Remote ES/VTEP Seq #'s

192.168.2.1 local active aa:c1:ab:4b:ff:71 0/0

192.168.200.254 remote active 00:00:5e:00:01:01 10.255.255.8 0/0

192.168.2.254 remote active 00:00:5e:00:01:01 10.255.255.6 0/0

192.168.200.1 remote active aa:c1:ab:b5:79:50 10.255.255.7 0/0

VNI 400 #ARP (IPv4 and IPv6, local and remote) 0

-------------------------------------------

Check BGP session status - underlay ///

-------------------------------------------

Nokia :

A:spine3# show network-instance default protocols bgp neighbor | grep ipv4

| default | 10.254.254.19 | udl-superspine | S | 65003 | established | 0d:0h:22m:44s | ipv4-unicast | [13/4/4] |

| default | 10.254.254.22 | udl-leaf | S | 65013 | established | 0d:0h:22m:52s | ipv4-unicast | [13/1/4] |

Cumulus :

root@leaf3:/# net show bgp ipv4 unicast summary

BGP router identifier 10.255.255.7, local AS number 65013 vrf-id 0

BGP table version 13

RIB entries 25, using 4800 bytes of memory

Peers 2, using 43 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

10.254.254.23 4 65002 466 403 0 0 0 00:19:34 4 13

10.254.254.25 4 65002 434 355 0 0 0 00:17:10 8 13
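On FRR-based devices such as Cumulus, the State/PfxRcd column of this summary shows a received-prefix count once a session is established, and the BGP state name (Idle, Connect, Active, ...) otherwise, which makes down sessions easy to spot from a script. A minimal Python sketch, assuming the column layout shown above; "down_peers" is a hypothetical helper.

```python
from __future__ import annotations

# Excerpt of `net show bgp ipv4 unicast summary` on leaf3, as shown above.
SAMPLE = """\
Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt
10.254.254.23 4 65002 466 403 0 0 0 00:19:34 4 13
10.254.254.25 4 65002 434 355 0 0 0 00:17:10 8 13
"""

STATE_COLUMN = 9  # position of State/PfxRcd in the neighbor rows


def down_peers(summary: str) -> dict[str, str]:
    """Return {neighbor: state} for every peer whose State/PfxRcd is not a count."""
    peers, in_table = {}, False
    for line in summary.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "Neighbor":    # header row: neighbor rows follow
            in_table = True
        elif in_table and len(fields) > STATE_COLUMN:
            state = fields[STATE_COLUMN]
            if not state.isdigit():    # e.g. Idle, Connect, Active
                peers[fields[0]] = state
    return peers


print(down_peers(SAMPLE) or "all peers established")
```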

-------------------------------------------

Check BGP session status - overlay ///

-------------------------------------------

Nokia :

A:spine3# show network-instance default protocols bgp neighbor | grep evpn

| default | 10.255.255.7 | ovl-leaf | S | 65013 | established | 0d:0h:24m:14s| evpn | [15/0/11] |

| default | 10.255.255.9 | ovl-superspine | S | 65003 | established | 0d:0h:24m:7s | evpn | [11/0/4] |

Cumulus :

root@leaf3-cum-dc1:/# net show bgp evpn summary

BGP router identifier 10.255.255.7, local AS number 65013 vrf-id 0

BGP table version 0

RIB entries 29, using 5568 bytes of memory

Peers 2, using 43 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

10.255.255.3 4 65002 5618 4742 0 0 0 03:53:35 19 27

10.255.255.4 4 65002 5565 4694 0 0 0 03:51:11 19 27

-------------------------------------------

Check EVPN L2 tables ///

-------------------------------------------

Nokia :

(I didn't find how to display EVPN routes in a BGP-style view.)

Cumulus :

root@leaf3-cum-dc1:/# net show bgp evpn route type 2

BGP table version is 17, local router ID is 10.255.255.7

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal

Origin codes: i - IGP, e - EGP, ? - incomplete

EVPN type-1 prefix: [1]:[ESI]:[EthTag]:[IPlen]:[VTEP-IP]

EVPN type-2 prefix: [2]:[EthTag]:[MAClen]:[MAC]:[IPlen]:[IP]

EVPN type-3 prefix: [3]:[EthTag]:[IPlen]:[OrigIP]

EVPN type-4 prefix: [4]:[ESI]:[IPlen]:[OrigIP]

EVPN type-5 prefix: [5]:[EthTag]:[IPlen]:[IP]

Network Next Hop Metric LocPrf Weight Path

Extended Community

Route Distinguisher: 10.255.255.5:100

* [2]:[0]:[48]:[aa:c1:ab:4b:ff:71]

10.255.255.5 0 65002 65003 65001 65011 i

RT:65000:100 ET:8

*> [2]:[0]:[48]:[aa:c1:ab:4b:ff:71]

10.255.255.5 0 65002 65003 65001 65011 i

RT:65000:100 ET:8

* [2]:[0]:[48]:[aa:c1:ab:4b:ff:71]:[32]:[192.168.1.1]

10.255.255.5 0 65002 65003 65001 65011 i

RT:65000:100 ET:8

*> [2]:[0]:[48]:[aa:c1:ab:4b:ff:71]:[32]:[192.168.1.1]

10.255.255.5 0 65002 65003 65001 65011 i

RT:65000:100 ET:8

Route Distinguisher: 10.255.255.5:200

* [2]:[0]:[48]:[aa:c1:ab:4b:ff:71]

10.255.255.5 0 65002 65003 65001 65011 i

RT:65000:200 RT:65011:10200 ET:8 Rmac:aa:c1:ab:4f:f2:e7

*> [2]:[0]:[48]:[aa:c1:ab:4b:ff:71]

10.255.255.5 0 65002 65003 65001 65011 i

RT:65000:200 RT:65011:10200 ET:8 Rmac:aa:c1:ab:4f:f2:e7

* [2]:[0]:[48]:[aa:c1:ab:4b:ff:71]:[32]:[192.168.2.1]

10.255.255.5 0 65002 65003 65001 65011 i

RT:65000:200 RT:65011:10200 ET:8 Rmac:aa:c1:ab:4f:f2:e7

*> [2]:[0]:[48]:[aa:c1:ab:4b:ff:71]:[32]:[192.168.2.1]

10.255.255.5 0 65002 65003 65001 65011 i

RT:65000:200 RT:65011:10200 ET:8 Rmac:aa:c1:ab:4f:f2:e7

Route Distinguisher: 10.255.255.6:200

* [2]:[0]:[48]:[00:00:5e:00:01:01]:[32]:[192.168.2.254]

10.255.255.6 0 65002 65003 65001 65012 i

RT:65000:200 ET:8 MM:0, sticky MAC

*> [2]:[0]:[48]:[00:00:5e:00:01:01]:[32]:[192.168.2.254]

10.255.255.6 0 65002 65003 65001 65012 i

RT:65000:200 ET:8 MM:0, sticky MAC

* [2]:[0]:[48]:[02:77:6b:ff:00:41]

10.255.255.6 0 65002 65003 65001 65012 i

RT:65000:200 ET:8 MM:0, sticky MAC

*> [2]:[0]:[48]:[02:77:6b:ff:00:41]

10.255.255.6 0 65002 65003 65001 65012 i

RT:65000:200 ET:8 MM:0, sticky MAC

Route Distinguisher: 10.255.255.7:100

*> [2]:[0]:[48]:[aa:c1:ab:b5:79:50]

10.255.255.7 32768 i

ET:8 RT:65000:100

*> [2]:[0]:[48]:[aa:c1:ab:b5:79:50]:[32]:[192.168.1.3]

10.255.255.7 32768 i

ET:8 RT:65000:100

Route Distinguisher: 10.255.255.7:200

*> [2]:[0]:[48]:[aa:c1:ab:b5:79:50]

10.255.255.7 32768 i

ET:8 RT:65000:200

*> [2]:[0]:[48]:[aa:c1:ab:b5:79:50]:[32]:[192.168.200.1]

10.255.255.7 32768 i

ET:8 RT:65000:200

Route Distinguisher: 10.255.255.8:200

* [2]:[0]:[48]:[00:00:5e:00:01:01]:[32]:[192.168.200.254]

10.255.255.8 0 65002 65014 i

RT:65000:200 ET:8 MM:0, sticky MAC

*> [2]:[0]:[48]:[00:00:5e:00:01:01]:[32]:[192.168.200.254]

10.255.255.8 0 65002 65014 i

RT:65000:200 ET:8 MM:0, sticky MAC

* [2]:[0]:[48]:[02:f8:b8:ff:00:41]

10.255.255.8 0 65002 65014 i

RT:65000:200 ET:8 MM:0, sticky MAC

*> [2]:[0]:[48]:[02:f8:b8:ff:00:41]

10.255.255.8 0 65002 65014 i

RT:65000:200 ET:8 MM:0, sticky MAC

And if we focus on VNI 100:

root@leaf3-cum-dc1:/# net show bgp evpn route vni 100 type 2

BGP table version is 48, local router ID is 10.255.255.7

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal

Origin codes: i - IGP, e - EGP, ? - incomplete

EVPN type-1 prefix: [1]:[ESI]:[EthTag]:[IPlen]:[VTEP-IP]

EVPN type-2 prefix: [2]:[EthTag]:[MAClen]:[MAC]:[IPlen]:[IP]

EVPN type-3 prefix: [3]:[EthTag]:[IPlen]:[OrigIP]

EVPN type-4 prefix: [4]:[ESI]:[IPlen]:[OrigIP]

EVPN type-5 prefix: [5]:[EthTag]:[IPlen]:[IP]

Network Next Hop Metric LocPrf Weight Path

* [2]:[0]:[48]:[aa:c1:ab:4b:ff:71]

10.255.255.5 0 65002 65003 65001 65011 i

RT:65000:100 ET:8

*> [2]:[0]:[48]:[aa:c1:ab:4b:ff:71]

10.255.255.5 0 65002 65003 65001 65011 i

RT:65000:100 ET:8

* [2]:[0]:[48]:[aa:c1:ab:4b:ff:71]:[32]:[192.168.1.1]

10.255.255.5 0 65002 65003 65001 65011 i

RT:65000:100 ET:8

*> [2]:[0]:[48]:[aa:c1:ab:4b:ff:71]:[32]:[192.168.1.1]

10.255.255.5 0 65002 65003 65001 65011 i

RT:65000:100 ET:8

*> [2]:[0]:[48]:[aa:c1:ab:b5:79:50]

10.255.255.7 32768 i

ET:8 RT:65000:100

*> [2]:[0]:[48]:[aa:c1:ab:b5:79:50]:[32]:[192.168.1.3]

10.255.255.7 32768 i

ET:8 RT:65000:100

The additional MAC+IP variant of the type-2 route is optional; it becomes useful, even mandatory, when you use anycast IRB.
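To separate the two flavors of type-2 routes in a long output, the bracketed prefix can be split according to the legend printed by FRR ("EVPN type-2 prefix: [2]:[EthTag]:[MAClen]:[MAC]:[IPlen]:[IP]"). A minimal Python sketch that only handles route types 2 and 5; the function and field names are hypothetical.

```python
import re

FIELD = re.compile(r"\[([^\]]*)\]")  # captures the text inside each [...] group

LAYOUTS = {
    2: ("eth_tag", "mac_len", "mac", "ip_len", "ip"),  # [2]:[EthTag]:[MAClen]:[MAC]:[IPlen]:[IP]
    5: ("eth_tag", "ip_len", "ip"),                    # [5]:[EthTag]:[IPlen]:[IP]
}


def parse_evpn_prefix(prefix: str) -> dict:
    """Split an FRR EVPN prefix (route types 2 and 5 only) into named fields."""
    parts = FIELD.findall(prefix)  # leading status codes such as "*>" are ignored
    route_type = int(parts[0])
    if route_type not in LAYOUTS:
        raise ValueError(f"route type {route_type} is not handled by this sketch")
    fields = dict(zip(LAYOUTS[route_type], parts[1:]))
    fields["type"] = route_type
    if route_type == 2:
        # MAC-only routes stop after the MAC; MAC+IP routes also carry IPlen and IP.
        fields["variant"] = "MAC+IP" if "ip" in fields else "MAC only"
    return fields


for line in ("*> [2]:[0]:[48]:[aa:c1:ab:4b:ff:71]",
             "*> [2]:[0]:[48]:[aa:c1:ab:4b:ff:71]:[32]:[192.168.1.1]",
             "*> [5]:[0]:[24]:[192.168.2.0]"):
    print(parse_evpn_prefix(line))
```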

-------------------------------------------

Check EVPN L3 tables ///

-------------------------------------------

Nokia :

--{ + running }--[ ]--

A:leaf2# show network-instance ip-vrf-200 route-table all

-----------------------------------------------------------------------------------------------------------------------------------------------------------

IPv4 Unicast route table of network instance ip-vrf-200

-----------------------------------------------------------------------------------------------------------------------------------------------------------

+-----------------------+-------+------------+----------------------+----------------------+----------+---------+--------------+--------------+

| Prefix | ID | Route Type | Route Owner | Best/Fib- | Metric | Pref | Next-hop | Next-hop |

| | | | | status(slot) | | | (Type) | Interface |

+=======================+=======+============+======================+======================+==========+=========+==============+==============+

| 192.168.2.0/24 | 9 | local | net_inst_mgr | True/success | 0 | 0 | 192.168.2.25 | irb0.200 |

| | | | | | | | 4 (direct) | |

| 192.168.2.254/32 | 9 | host | net_inst_mgr | True/success | 0 | 0 | None | None |

| | | | | | | | (extract) | |

| 192.168.2.255/32 | 9 | host | net_inst_mgr | True/success | 0 | 0 | None | None |

| | | | | | | | (broadcast) | |

| 192.168.200.0/24 | 0 | bgp-evpn | bgp_evpn_mgr | True/success | 0 | 170 | 10.255.255.8 | None |

| | | | | | | | (indirect/vx | |

| | | | | | | | lan) | |

+-----------------------+-------+------------+----------------------+----------------------+----------+---------+--------------+--------------+

-----------------------------------------------------------------------------------------------------------------------------------------------------------

4 IPv4 routes total

4 IPv4 prefixes with active routes

0 IPv4 prefixes with active ECMP routes

-----------------------------------------------------------------------------------------------------------------------------------------------------------

--{ + running }--[ ]--

A:leaf2#

(I didn't find how to display EVPN routes in a BGP-style view.)

Cumulus :

root@leaf1-cum-dc1:/# net show route vrf ip-vrf-200

show ip route vrf ip-vrf-200

=============================

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued, r - rejected, b - backup

t - trapped, o - offload failure

VRF ip-vrf-200:

K>* 0.0.0.0/0 [255/8192] unreachable (ICMP unreachable), 04:48:42

B 192.168.2.0/24 [20/0] via 10.255.255.6, vlan200 onlink, weight 1, 04:27:26

C>* 192.168.2.0/24 is directly connected, vlan200, 04:48:42

B>* 192.168.200.0/24 [20/0] via 10.255.255.8, vlan200 onlink, weight 1, 03:48:49

root@leaf1-cum-dc1:/# net show bgp evpn route type 5

BGP table version is 3, local router ID is 10.255.255.5

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal

Origin codes: i - IGP, e - EGP, ? - incomplete

EVPN type-1 prefix: [1]:[ESI]:[EthTag]:[IPlen]:[VTEP-IP]

EVPN type-2 prefix: [2]:[EthTag]:[MAClen]:[MAC]:[IPlen]:[IP]

EVPN type-3 prefix: [3]:[EthTag]:[IPlen]:[OrigIP]

EVPN type-4 prefix: [4]:[ESI]:[IPlen]:[OrigIP]

EVPN type-5 prefix: [5]:[EthTag]:[IPlen]:[IP]

Network Next Hop Metric LocPrf Weight Path

Extended Community

Route Distinguisher: 10.255.255.6:10200

* [5]:[0]:[24]:[192.168.2.0]

10.255.255.6 0 65001 65012 i

RT:65000:10200 ET:8 Rmac:02:77:6b:ff:00:00

*> [5]:[0]:[24]:[192.168.2.0]

10.255.255.6 0 65001 65012 i

RT:65000:10200 ET:8 Rmac:02:77:6b:ff:00:00

Route Distinguisher: 10.255.255.8:10200

*> [5]:[0]:[24]:[192.168.200.0]

10.255.255.8 0 65001 65003 65002 65014 i

RT:65000:10200 ET:8 Rmac:02:f8:b8:ff:00:00

-------------------------------------------

Check host ARP tables ///

-------------------------------------------

From client #1 /

bash-5.0# arp

Address HWtype HWaddress Flags Mask Iface

192.168.1.3 ether aa:c1:ab:b5:79:50 C eth1.100

192.168.1.4 ether aa:c1:ab:0c:60:d6 C eth1.100

192.168.2.2 ether aa:c1:ab:23:31:04 C eth1.200

192.168.2.254 ether aa:c1:ab:4f:f2:e7 C eth1.200

192.168.1.2 ether aa:c1:ab:23:31:04 C eth1.100

containerlab.local ether 02:42:63:9e:74:03 C eth0

-------------------------------------------

Ping ///

-------------------------------------------

From client #1 /

L2 service ping (vlan 100) ----------------------

bash-5.0# ping -c 1 192.168.1.2

PING 192.168.1.2 (192.168.1.2) 56(84) bytes of data.

64 bytes from 192.168.1.2: icmp_seq=1 ttl=64 time=0.812 ms

--- 192.168.1.2 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.812/0.812/0.812/0.000 ms

bash-5.0# ping -c 1 192.168.1.3

PING 192.168.1.3 (192.168.1.3) 56(84) bytes of data.

64 bytes from 192.168.1.3: icmp_seq=1 ttl=64 time=1.40 ms

--- 192.168.1.3 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 1.403/1.403/1.403/0.000 ms

bash-5.0# ping -c 1 192.168.1.4

PING 192.168.1.4 (192.168.1.4) 56(84) bytes of data.

64 bytes from 192.168.1.4: icmp_seq=1 ttl=64 time=1.62 ms

--- 192.168.1.4 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 1.619/1.619/1.619/0.000 ms

L3 service ping (vlan 200)

bash-5.0# ping -c 1 192.168.2.1

PING 192.168.2.1 (192.168.2.1) 56(84) bytes of data.

64 bytes from 192.168.2.1: icmp_seq=1 ttl=64 time=0.053 ms

--- 192.168.2.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 1ms

rtt min/avg/max/mdev = 0.053/0.053/0.053/0.000 ms

bash-5.0# ^C

bash-5.0# ping -c 1 192.168.2.2

PING 192.168.2.2 (192.168.2.2) 56(84) bytes of data.

64 bytes from 192.168.2.2: icmp_seq=1 ttl=64 time=0.611 ms

--- 192.168.2.2 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.611/0.611/0.611/0.000 ms

-------------------------------------------

Traceroute ///

-------------------------------------------

L3 service traceroute (vlan 200)

bash-5.0# traceroute 192.168.200.1

traceroute to 192.168.200.1 (192.168.200.1), 30 hops max, 46 byte packets

1 192.168.2.254 (192.168.2.254) 0.012 ms 0.010 ms 0.007 ms

2 192.168.200.254 (192.168.200.254) 2.751 ms * 2.549 ms

3 192.168.200.1 (192.168.200.1) 2.084 ms 1.805 ms 1.761 ms

Coming back to the design, we wanted to implement a solution inspired by RFC 7938 (see section 5.2.1):

- eBGP will be used for Underlay and Overlay

- eBGP single-hop sessions are established over direct point-to-point links interconnecting the network nodes, no multi-hop or loopback sessions are used, even in the case of multiple links between the same pair of nodes.

- Private Use ASNs from the range 64512-65534 are used to avoid ASN conflicts.

- A single ASN is allocated to all of the Clos topology's Tier 1 devices.

- A unique ASN is allocated to each set of Tier 2 devices in the same cluster.

- A unique ASN is allocated to every Tier 3 device (e.g., ToR) in this topology.
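The ASN rules listed above can be turned into a small allocator. This is a minimal sketch, assuming ASNs are handed out sequentially from the start of the private range (tier 1 first, then one per tier-2 cluster, then one per ToR); the cluster and leaf names are hypothetical and not taken from the lab files.

```python
# Sketch of the RFC 7938 section 5.2.1 ASN rules; the allocation order is an assumption.
from dataclasses import dataclass, field

PRIVATE_ASN_MIN = 64512  # private-use range quoted in RFC 7938
PRIVATE_ASN_MAX = 65534


@dataclass
class AsnPlan:
    tier1: int                                           # shared by every tier-1 device
    tier2: dict[str, int] = field(default_factory=dict)  # tier-2 cluster -> ASN
    tier3: dict[str, int] = field(default_factory=dict)  # ToR (leaf) -> ASN


def allocate_asns(clusters: dict[str, list[str]]) -> AsnPlan:
    """clusters maps each tier-2 cluster name to the ToR (tier-3) names below it."""
    pool = iter(range(PRIVATE_ASN_MIN, PRIVATE_ASN_MAX + 1))

    def take() -> int:
        try:
            return next(pool)
        except StopIteration:
            raise ValueError("private ASN range exhausted") from None

    plan = AsnPlan(tier1=take())          # a single ASN for the whole tier 1
    for cluster in clusters:
        plan.tier2[cluster] = take()      # one ASN per tier-2 cluster
    for tors in clusters.values():
        for tor in tors:
            if tor in plan.tier3:
                raise ValueError(f"ToR {tor} listed twice")
            plan.tier3[tor] = take()      # one ASN per ToR
    return plan


if __name__ == "__main__":
    print(allocate_asns({"cluster-a": ["leaf1", "leaf2"], "cluster-b": ["leaf3"]}))
```

Sequential allocation keeps the plan easy to read; in a real deployment you would more likely reserve blocks per tier so that adding a leaf does not shift anything.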

In addition, depending on the network nodes, it would be worth considering:

- Adding a fast-failover option for the underlay, plus link debounce on links to limit the impact of link flaps. BFD could be considered in some cases.

- Using the best available ECMP hashing algorithm on leaf nodes. At tier-1/tier-2 levels, hashing is based on the underlay headers (inter-VTEP traffic), i.e. an L3-L4 decision, although, if I remember correctly, some models can also take the VNI into account.

- Enabling "multipath relax", since the same destination can be learned through different AS paths of equal length.

- Implementing the graceful-shutdown (gshut) community on the underlay (tier-1/tier-2), or at least policies that prepend underlay routes, to steer traffic away from nodes under maintenance.
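To show how multipath relax, gshut and prepending interact in the list above, here is a deliberately reduced model of BGP multipath selection. It is a sketch, not a BGP implementation: only LOCAL_PREF and AS-path length are compared, the lowest next hop stands in for all remaining tie-breakers, and the next hops and ASNs used below are illustrative. The gshut community value 65535:0 and the "lower LOCAL_PREF on receipt" behaviour come from RFC 8326.

```python
# Reduced BGP multipath model: only LOCAL_PREF and AS-path length are compared.
import ipaddress
from dataclasses import dataclass

GSHUT = "65535:0"  # well-known GRACEFUL_SHUTDOWN community (RFC 8326)


@dataclass(frozen=True)
class Path:
    next_hop: str
    as_path: tuple[int, ...]
    communities: frozenset[str] = frozenset()
    local_pref: int = 100


def effective_local_pref(p: Path) -> int:
    # RFC 8326 receivers lower LOCAL_PREF (0 is the usual value) on gshut paths.
    return 0 if GSHUT in p.communities else p.local_pref


def multipath_set(paths: list[Path], relax: bool) -> list[Path]:
    """Return the paths that would be installed as ECMP next hops."""
    if not paths:
        return []
    best_lp = max(effective_local_pref(p) for p in paths)
    cands = [p for p in paths if effective_local_pref(p) == best_lp]
    shortest = min(len(p.as_path) for p in cands)        # prepended paths lose here
    cands = [p for p in cands if len(p.as_path) == shortest]
    if relax:
        return cands                                      # equal length is enough
    # Without relax, only paths with the same AS path as the best one qualify.
    # The lowest next hop stands in for the remaining BGP tie-breakers.
    best = min(cands, key=lambda p: ipaddress.ip_address(p.next_hop))
    return [p for p in cands if p.as_path == best.as_path]
```

For example, a VTEP learned through two spines in different ASNs, `(65001, 65103)` and `(65002, 65103)`, yields two ECMP paths only when `relax=True`; tagging one of them with `GSHUT`, or prepending it, removes it from the set.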

Thoughts / points to dig into

On leaf nodes, LACP across multiple nodes is feasible with ESI (EVPN multihoming), but the impact during incidents and maintenance can vary depending on the vendor.

Tests need to be done to check that.

Some sysadmins prefer to keep it simple (KISS) and connect client servers with active/passive bonding, possibly with port aggregation toward a single leaf.

Note: managing ESI next hops in the FIB on ingress nodes can hit some limitations (number of installable next hops, load balancing, etc.), often tied to the node's chipset.

Another important engineering decision to make at the beginning: do we allow L2 propagation across sites or not?

From my point of view, the smaller the L2 segments, the better. I would suggest local L2 segments with anycast gateways provided by the leaf switches, and L3 routing between DCs based only on EVPN type-5 route exchange. Nevertheless, some end-customer business requirements can keep extended L2 alive.

We have to be careful when implementing consistent (resilient) hashing, e.g.: https://docs.nvidia.com/networking-ethernet-software/cumulus-linux-44/Layer-3/Routing/Equal-Cost-Multipath-Load-Sharing-Hardware-ECMP/

If possible, enable it per VRF rather than globally, and restrict it to dedicated subnets. Depending on the vendor, it can be risky in terms of memory (hardware table) usage.
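Why this kind of hashing matters can be seen by comparing plain modulo ECMP with a fixed bucket table, which is the general idea behind resilient hashing. This is a minimal sketch under stated assumptions: `zlib.crc32` over the flow tuple stands in for the ASIC hash, and the 64-bucket size and spine names are arbitrary. It also hints at the memory concern above: the bucket table costs far more hardware entries than a plain next-hop group.

```python
# Modulo ECMP vs. a fixed bucket table (the idea behind resilient hashing).
import zlib


def flow_hash(flow: tuple) -> int:
    # Stand-in hash; switch ASICs use their own hash functions and fields.
    return zlib.crc32(repr(flow).encode())


def modulo_pick(flow: tuple, next_hops: list[str]) -> str:
    # Plain ECMP: the bucket count equals the number of next hops.
    return next_hops[flow_hash(flow) % len(next_hops)]


class ResilientGroup:
    """Fixed number of buckets; a removed next hop only loses its own buckets."""

    def __init__(self, next_hops: list[str], buckets: int = 64):
        self.buckets = [next_hops[i % len(next_hops)] for i in range(buckets)]

    def pick(self, flow: tuple) -> str:
        return self.buckets[flow_hash(flow) % len(self.buckets)]

    def remove(self, next_hop: str) -> None:
        survivors = sorted({b for b in self.buckets if b != next_hop})
        if not survivors:
            raise ValueError("cannot remove the last next hop")
        j = 0
        for i, b in enumerate(self.buckets):
            if b == next_hop:            # only these buckets are reassigned
                self.buckets[i] = survivors[j % len(survivors)]
                j += 1


def moved_flows(before: dict, after: dict, removed: str) -> int:
    """Count flows that changed next hop although their old one survived."""
    return sum(1 for f, nh in before.items() if nh != removed and after[f] != nh)
```

With modulo hashing, losing one of four spines reshuffles most surviving flows; with the bucket table, only flows that were on the failed spine move.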

Remember to configure the BGP option that prevents advertising routes to peers before they are installed in the FIB.
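The behaviour of that option can be illustrated with a toy model: a route selected by BGP is only advertised once the forwarding plane confirms it is installed, so peers never send traffic into a black hole. The class and method names below are hypothetical; in FRR, which Cumulus Linux uses, the corresponding knob is, to our knowledge, `bgp suppress-fib-pending`, but check your vendor documentation.

```python
# Toy model of "advertise only after FIB install"; class and method names are hypothetical.
class ToySpeaker:
    def __init__(self) -> None:
        self.rib: set[str] = set()   # best paths selected by BGP
        self.fib: set[str] = set()   # prefixes the forwarding plane has confirmed

    def learn(self, prefix: str) -> None:
        self.rib.add(prefix)

    def fib_ack(self, prefix: str) -> None:
        # Called when the dataplane reports the route as installed.
        if prefix in self.rib:
            self.fib.add(prefix)

    def advertisable(self, suppress_fib_pending: bool = True) -> list[str]:
        # With suppression, peers only hear about routes we can actually forward.
        return sorted(self.fib if suppress_fib_pending else self.rib)
```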

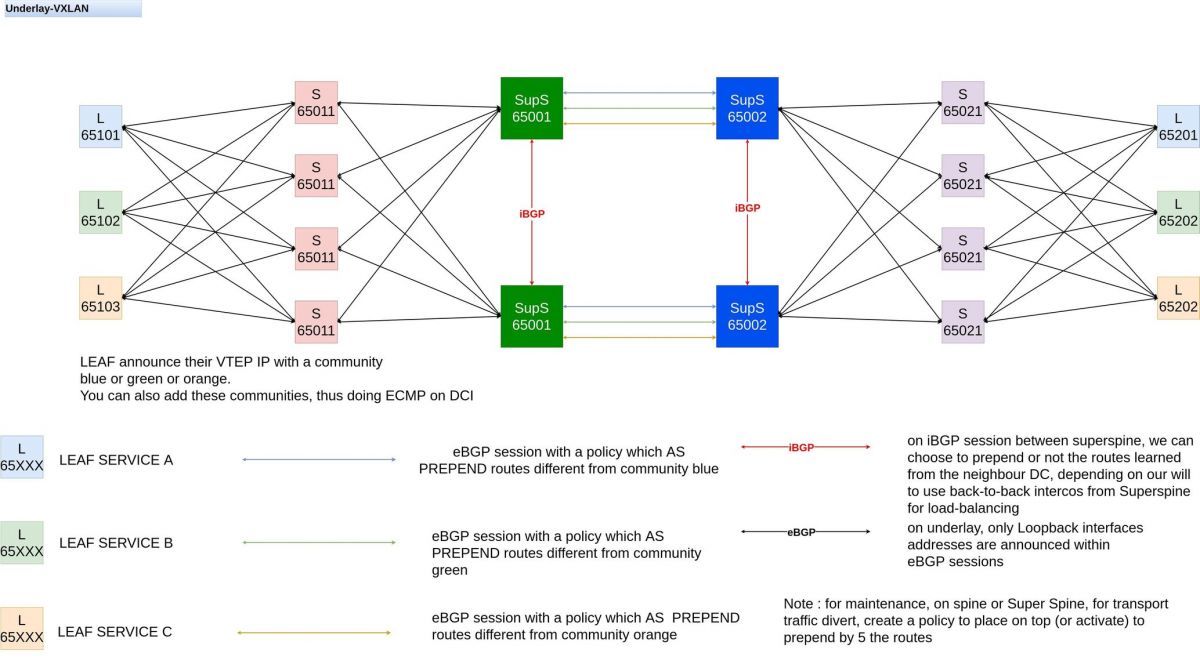

In some setups, we can assign roles to leaves to do "light traffic engineering" on DCI links.

These roles are carried as BGP communities attached to the VTEP /32 routes (see the diagram above). Depending on the colour, the eBGP sessions between super-spines (SupS) prepend, or not, the VTEP IPs announced to the remote DC.
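The colour-based export policy described above boils down to a lookup from community to prepend count. This is a minimal sketch: the community values `65000:100` / `65000:200`, the "green"/"red" names, the prepend count of 2 and the super-spine ASN 65500 are all hypothetical, since the lab does not define them.

```python
# Hypothetical colour-to-prepend policy on super-spine DCI exports.
COLOUR_PREPENDS = {
    "65000:100": 0,  # hypothetical "green": announce the VTEP as-is
    "65000:200": 2,  # hypothetical "red": make the VTEP less attractive remotely
}


def export_to_remote_dc(prefix: str, as_path: tuple[int, ...],
                        communities: set[str], local_asn: int) -> tuple[str, tuple[int, ...]]:
    """Return (prefix, AS path) as announced on the DCI eBGP session."""
    extra = max((COLOUR_PREPENDS.get(c, 0) for c in communities), default=0)
    # eBGP always adds the local ASN once; the colour decides the extra copies.
    return prefix, (local_asn,) * (1 + extra) + as_path
```

The remote DC then prefers the VTEPs announced without prepends, which is enough to bias DCI traffic without touching the leaves themselves.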

To be continued

Lab archives

Reminder: the following configs are lab configs, so please ignore typos, missing security parameters and unused config. Thanks \o/

Credentials

SRLinux : admin/<no_password>

CVX : cumulus/cumulus


Layer 2 (VLAN 100) + layer 3 (VLAN 200) lab, 10/12/2021

If you want to discuss this lab or these topics, please don't hesitate to contact us.

SONiC Lab

<Note that this information is also included in the readme.md file in the archive>

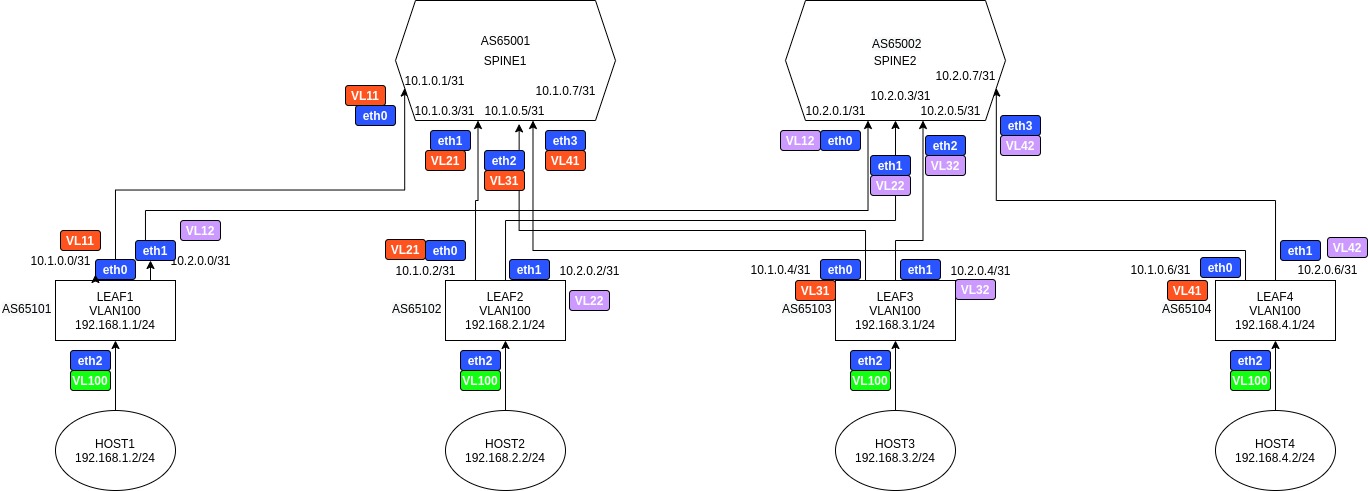

Lab diagram

Lab archive Attach:sonic-lab-20201030.zip

This lab is forked from several labs found on @, starting with Microsoft's: https://github.com/Azure/SONiC/wiki/SONiC-P4-Software-Switch

The goal of this lab is to simulate a simple IP fabric in a spine/leaf topology. Each leaf provides local L2 connectivity and L3 gateways to its hosts. Flows between hosts on different leaves are L3-routed along a LEAF-SPINE-LEAF path.
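The addressing and ASN plan of this lab is not spelled out on this page, but it can be inferred from the BGP and ping outputs shown further down (spine ASNs 65001/65002, leaf ASNs 65101 to 65104, one /31 per spine-leaf link with the leaf on the even address, host subnets 192.168.X.0/24 with the gateway on .1). The sketch below regenerates that plan; the spine side sitting on the odd address of each /31 is an assumption based on spine1's router ID 10.1.0.1.

```python
# Addressing/ASN plan inferred from the outputs on this page; spine side on the odd /31 address is assumed.
import ipaddress


def sonic_lab_plan(spines: int = 2, leaves: int = 4) -> dict:
    links = []
    for spine in range(1, spines + 1):
        for leaf in range(1, leaves + 1):
            # One /31 per spine-leaf link: 10.<spine>.0.<2*(leaf-1)>/31
            net = ipaddress.ip_network(f"10.{spine}.0.{2 * (leaf - 1)}/31")
            links.append({
                "spine": f"spine{spine}", "spine_asn": 65000 + spine, "spine_ip": str(net[1]),
                "leaf": f"leaf{leaf}", "leaf_asn": 65100 + leaf, "leaf_ip": str(net[0]),
            })
    hosts = {}
    for leaf in range(1, leaves + 1):
        subnet = ipaddress.ip_network(f"192.168.{leaf}.0/24")
        # Leaf gateway on .1 and host on .2, as in the ping tests.
        hosts[f"host{leaf}"] = {"subnet": str(subnet), "gateway": str(subnet[1]), "ip": str(subnet[2])}
    return {"links": links, "hosts": hosts}
```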

Nothing complicated, but it is a first step into the SONiC world :)

For now, as far as we know, MC-LAG and EVPN/VXLAN features are only available in enterprise SONiC versions. Tests will follow once physical switches running an enterprise version are available in our lab.

The architecture diagram is in the zip file (SONIC_LAB.jpg).

To run the lab, you need to:

- install Docker by running ./install_docker_ovs.sh

- run ./load_image.sh to get the SONiC image

Note: don't rename the files/folders of the network devices (leafX, spineX).

Once you're ready, please run the lab by launching "./start.sh"

The script:

- launches the containers without Docker networking features

- creates network namespaces

- builds Linux bridges to interconnect the containers

- configures host IPs

- launches the SONiC startup scripts on the SONiC devices

- once the devices are started, runs checks and ping tests. If all BGP sessions are up on both spines and all pings succeed, the setup works.

The start script contains many comments, perhaps not always with the right vocabulary, so please be tolerant :)

If you want to stop the lab, please launch "./stop.sh"

Below are some useful commands to run on the SONiC containers:

run ping

sudo docker exec -it <container> ping <ip>

This command runs ping inside the targeted container:

plancastre@dockerhost:~/00.Projects/p4-version-pla$ sudo docker exec -it leaf1 ping 192.168.1.1

PING 192.168.1.1 (192.168.1.1): 56 data bytes

64 bytes from 192.168.1.1: icmp_seq=0 ttl=64 time=0.052 ms

64 bytes from 192.168.1.1: icmp_seq=1 ttl=64 time=0.034 ms

^C--- 192.168.1.1 ping statistics ---

check bgp sessions

sudo docker exec -it <container> vtysh -c "show ip bgp sum"

This command calls the routing shell (vtysh) of the targeted container to print the BGP status. Below, we run it on one spine; you should see 4 sessions up (one per leaf).

plancastre@dockerhost:~/00.Projects/p4-version-pla$ sudo docker exec -it spine1 vtysh -c "show ip bgp sum"

BGP router identifier 10.1.0.1, local AS number 65001

RIB entries 8, using 896 bytes of memory

Peers 4, using 18 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd

10.1.0.0 4 65101 6409 6411 0 0 0 01:46:46 3

10.1.0.2 4 65102 6406 6410 0 0 0 01:46:44 3

10.1.0.4 4 65103 6407 6410 0 0 0 01:46:44 4

10.1.0.6 4 65104 6406 6412 0 0 0 01:46:46 1

Total number of neighbors 4
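The start script's "are all BGP peers up?" check can be reproduced by parsing this output. A minimal sketch, assuming the column layout shown above: in this vtysh summary, the last column (State/PfxRcd) shows a prefix count when the session is Established and a state name such as Active or Idle otherwise. The function names are hypothetical.

```python
# Parse "show ip bgp sum" text as printed by vtysh on this page.
def parse_bgp_summary(text: str) -> dict[str, object]:
    """Map neighbor -> prefixes received (int) or session state (str)."""
    sessions: dict[str, object] = {}
    in_table = False
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue                      # tolerate blank lines in pasted output
        if fields[0] == "Neighbor":
            in_table = True               # header row of the neighbor table
            continue
        if in_table:
            if fields[0] == "Total":
                break
            state = fields[-1]            # last column: State/PfxRcd
            sessions[fields[0]] = int(state) if state.isdigit() else state
    return sessions


def all_established(sessions: dict[str, object], expected: int) -> bool:
    # A number in State/PfxRcd means Established; a word (Active, Idle...) means not.
    return len(sessions) == expected and all(isinstance(v, int) for v in sessions.values())
```

Feeding it the spine1 output above gives four neighbors with 3, 3, 4 and 1 prefixes, so `all_established(..., 4)` is true.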

check ip route

sudo docker exec -it <container> vtysh -c "show ip route"

This command calls the routing shell (vtysh) of the targeted container to print its routing table:

plancastre@dockerhost:~/00.Projects/p4-version-pla$ sudo docker exec -it spine1 vtysh -c "show ip route"

Codes: K - kernel route, C - connected, S - static, R - RIP, O - OSPF, I - IS-IS, B - BGP, P - PIM, A - Babel, > - selected route, * - FIB route

C>* 10.1.0.0/31 is directly connected, Vlan11

C>* 10.1.0.2/31 is directly connected, Vlan21

C>* 10.1.0.4/31 is directly connected, Vlan31

C>* 10.1.0.6/31 is directly connected, Vlan41

C>* 127.0.0.0/8 is directly connected, lo

B>* 192.168.1.0/24 [20/0] via 10.1.0.0, Vlan11, 01:47:23

B>* 192.168.2.0/24 [20/0] via 10.1.0.2, Vlan21, 01:47:21

B>* 192.168.3.0/24 [20/0] via 10.1.0.4, Vlan31, 01:47:21

B>* 192.168.4.0/24 [20/0] via 10.1.0.6, Vlan41, 01:47:23
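On a spine, the useful check on this table is that every host subnet is learned via BGP, selected (`>`) and installed in the FIB (`*`), per the codes legend. A minimal sketch, assuming the line format shown above and the 192.168.X.0/24 host subnets of this lab; the function names are hypothetical.

```python
import re

# "<proto><flags> <prefix> ..." e.g. "B>* 192.168.1.0/24 [20/0] via 10.1.0.0, Vlan11, 01:47:23"
ROUTE_RE = re.compile(r"^(?P<proto>[A-Z])(?P<flags>[>*]*)\s+(?P<prefix>\d+\.\d+\.\d+\.\d+/\d+)")


def bgp_fib_prefixes(text: str) -> set[str]:
    """Prefixes learned via BGP that are both selected (>) and in the FIB (*)."""
    found = set()
    for line in text.splitlines():
        m = ROUTE_RE.match(line.strip())
        if m and m.group("proto") == "B" and {">", "*"} <= set(m.group("flags")):
            found.add(m.group("prefix"))
    return found


def missing_host_subnets(text: str, leaves: int = 4) -> set[str]:
    # Assumes the 192.168.<leaf>.0/24 host subnets used in this lab.
    expected = {f"192.168.{n}.0/24" for n in range(1, leaves + 1)}
    return expected - bgp_fib_prefixes(text)
```

An empty result from `missing_host_subnets` on each spine means every leaf's host subnet is reachable.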

For more commands, check the SONiC docs: https://github.com/Azure/sonic-utilities/blob/master/doc/Command-Reference.md

To finish, below is the output of start.sh when everything goes well:

plancastre@dockerhost:~/00.Projects/p4-version-pla$ ./start.sh
---------------------------------------------------
Creating the containers: starting
---------------------------------------------------
0b4bbb3c6cfc9895466ea13a9e940d1a176d66f45deee9752e9b4732d735bb03
5ca9486baab3e8a5259062167132a944eee717875d3cc1ef29fe490cba3184c1
46c9c09dba27a32715b9fbe0bc434a9284b9d59317d278df693f425cb669f037
898e6afbcb2977115acbba2a69d069796cfd70fb90228b6c18e09ba4584365f7
eb9b3ef6d14784c9ffe4d9d5df3e921a24559889cb8770d226263aad6761182a
ae5f3b2c628da687eaabb331dc2cd989e71bcb386854e7a0be6ec587df99d49a
122197bffd7566a01ce3b6565fdfb6eb893aab7d5236f14e5a9bbbe95037ced2
a604520a080e2a2184598ad1d915c0c182d16e5185033373e95a4772d8978a67
0c49e18d78b2aa7c8ef8e937b60285aa56ec0bf4a8530226285270a085e11d5c
eb66336b8c6bd8333f1f4f685369e6cac973b817c35bb7126ac4d7ba72ef71bf
---------------------------------------------------
Retreiving PID from docker containers
---------------------------------------------------
---------------------------------------------------
Creating network namespaces for containers
---------------------------------------------------
List of created namespaces
ns_host4
ns_host3
ns_host2
ns_host1
ns_spine2
ns_spine1
ns_leaf4
ns_leaf3
ns_leaf2
ns_leaf1
---------------------------------------------------
Creating network connectivity between containers
---------------------------------------------------
---------------------------------------------------
Now assigning IPs to hosts (host1 to host4)
---------------------------------------------------
---------------------------------------------------
Configuration of containers is now finished
---------------------------------------------------
---------------------------------------------------
Now booting switches, please wait 2 minutes for switches to load
---------------------------------------------------
---------------------------------------------------
2 minutes period is finished
---------------------------------------------------
---------------------------------------------------
Now fixing the iptables
---------------------------------------------------
---------------------------------------------------
Backend actions are now finished
---------------------------------------------------
---------------------------------------------------
Now we'll check in 15 sec that BGP peers are all Up
---------------------------------------------------
---------------------------------------------------
SPINE1 check BGP Sessions
---------------------------------------------------
BGP router identifier 10.1.0.1, local AS number 65001
RIB entries 8, using 896 bytes of memory
Peers 4, using 18 KiB of memory
Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd
10.1.0.0 4 65101 94 97 0 0 0 00:01:28 4
10.1.0.2 4 65102 95 97 0 0 0 00:01:28 5
10.1.0.4 4 65103 95 97 0 0 0 00:01:28 5
10.1.0.6 4 65104 95 97 0 0 0 00:01:28 5
Total number of neighbors 4
---------------------------------------------------
SPINE2 check BGP Sessions
---------------------------------------------------
BGP router identifier 10.2.0.1, local AS number 65002
RIB entries 8, using 896 bytes of memory
Peers 4, using 18 KiB of memory
Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd
10.2.0.0 4 65101 93 96 0 0 0 00:01:29 2
10.2.0.2 4 65102 94 100 0 0 0 00:01:32 1
10.2.0.4 4 65103 94 100 0 0 0 00:01:32 1
10.2.0.6 4 65104 94 100 0 0 0 00:01:32 1
Total number of neighbors 4
---------------------------------------------------
Now if all is ok, we'll do some ping tests
---------------------------------------------------
---------------------------------------------------
host1 ping its gateway
---------------------------------------------------
PING 192.168.1.1 (192.168.1.1) 56(84) bytes of data.
64 bytes from 192.168.1.1: icmp_seq=1 ttl=64 time=17.6 ms
64 bytes from 192.168.1.1: icmp_seq=2 ttl=64 time=9.14 ms
--- 192.168.1.1 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1000ms
rtt min/avg/max/mdev = 9.145/13.390/17.636/4.247 ms
---------------------------------------------------
host2 ping its gateway
---------------------------------------------------
PING 192.168.2.1 (192.168.2.1) 56(84) bytes of data.
64 bytes from 192.168.2.1: icmp_seq=1 ttl=64 time=23.6 ms
64 bytes from 192.168.2.1: icmp_seq=2 ttl=64 time=6.21 ms
--- 192.168.2.1 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1001ms
rtt min/avg/max/mdev = 6.218/14.950/23.683/8.733 ms
---------------------------------------------------
host3 ping its gateway
---------------------------------------------------
PING 192.168.3.1 (192.168.3.1) 56(84) bytes of data.
64 bytes from 192.168.3.1: icmp_seq=1 ttl=64 time=18.8 ms
64 bytes from 192.168.3.1: icmp_seq=2 ttl=64 time=12.7 ms
--- 192.168.3.1 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1001ms
rtt min/avg/max/mdev = 12.716/15.764/18.812/3.048 ms
---------------------------------------------------
host4 ping its gateway
---------------------------------------------------
PING 192.168.4.1 (192.168.4.1) 56(84) bytes of data.
64 bytes from 192.168.4.1: icmp_seq=1 ttl=64 time=17.7 ms
64 bytes from 192.168.4.1: icmp_seq=2 ttl=64 time=4.46 ms
--- 192.168.4.1 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1001ms
rtt min/avg/max/mdev = 4.464/11.107/17.750/6.643 ms
---------------------------------------------------
host1 sends 3 ping the 3 other hosts
---------------------------------------------------
PING 192.168.2.2 (192.168.2.2) 56(84) bytes of data.
64 bytes from 192.168.2.2: icmp_seq=1 ttl=61 time=16.0 ms
64 bytes from 192.168.2.2: icmp_seq=2 ttl=61 time=19.5 ms
64 bytes from 192.168.2.2: icmp_seq=3 ttl=61 time=16.1 ms
--- 192.168.2.2 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2002ms
rtt min/avg/max/mdev = 16.049/17.253/19.532/1.615 ms
PING 192.168.3.2 (192.168.3.2) 56(84) bytes of data.
64 bytes from 192.168.3.2: icmp_seq=1 ttl=61 time=15.5 ms
64 bytes from 192.168.3.2: icmp_seq=2 ttl=61 time=14.3 ms
64 bytes from 192.168.3.2: icmp_seq=3 ttl=61 time=16.9 ms
--- 192.168.3.2 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2002ms
rtt min/avg/max/mdev = 14.312/15.592/16.939/1.083 ms
PING 192.168.4.2 (192.168.4.2) 56(84) bytes of data.
64 bytes from 192.168.4.2: icmp_seq=1 ttl=61 time=16.0 ms
64 bytes from 192.168.4.2: icmp_seq=2 ttl=61 time=20.7 ms
64 bytes from 192.168.4.2: icmp_seq=3 ttl=61 time=16.8 ms
--- 192.168.4.2 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2002ms
rtt min/avg/max/mdev = 16.048/17.871/20.739/2.058 ms
---------------------------------------------------
host2 sends 3 ping the 3 other hosts
---------------------------------------------------
PING 192.168.1.2 (192.168.1.2) 56(84) bytes of data.
64 bytes from 192.168.1.2: icmp_seq=1 ttl=61 time=16.2 ms
64 bytes from 192.168.1.2: icmp_seq=2 ttl=61 time=16.6 ms
64 bytes from 192.168.1.2: icmp_seq=3 ttl=61 time=16.9 ms
--- 192.168.1.2 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2002ms
rtt min/avg/max/mdev = 16.222/16.583/16.924/0.286 ms
PING 192.168.3.2 (192.168.3.2) 56(84) bytes of data.
64 bytes from 192.168.3.2: icmp_seq=1 ttl=61 time=16.6 ms
64 bytes from 192.168.3.2: icmp_seq=2 ttl=61 time=18.1 ms
64 bytes from 192.168.3.2: icmp_seq=3 ttl=61 time=26.8 ms
--- 192.168.3.2 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2002ms
rtt min/avg/max/mdev = 16.620/20.549/26.887/4.524 ms
PING 192.168.4.2 (192.168.4.2) 56(84) bytes of data.
64 bytes from 192.168.4.2: icmp_seq=1 ttl=61 time=33.8 ms
64 bytes from 192.168.4.2: icmp_seq=2 ttl=61 time=27.7 ms
64 bytes from 192.168.4.2: icmp_seq=3 ttl=61 time=20.2 ms
--- 192.168.4.2 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2002ms
rtt min/avg/max/mdev = 20.216/27.259/33.818/5.566 ms
---------------------------------------------------
host3 sends 3 ping the 3 other hosts
---------------------------------------------------
PING 192.168.1.2 (192.168.1.2) 56(84) bytes of data.
64 bytes from 192.168.1.2: icmp_seq=1 ttl=61 time=14.5 ms
64 bytes from 192.168.1.2: icmp_seq=2 ttl=61 time=19.5 ms
64 bytes from 192.168.1.2: icmp_seq=3 ttl=61 time=15.5 ms
--- 192.168.1.2 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2002ms
rtt min/avg/max/mdev = 14.535/16.549/19.541/2.162 ms
PING 192.168.2.2 (192.168.2.2) 56(84) bytes of data.
64 bytes from 192.168.2.2: icmp_seq=1 ttl=61 time=16.4 ms
64 bytes from 192.168.2.2: icmp_seq=2 ttl=61 time=14.6 ms
64 bytes from 192.168.2.2: icmp_seq=3 ttl=61 time=18.2 ms
--- 192.168.2.2 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2002ms
rtt min/avg/max/mdev = 14.643/16.458/18.278/1.491 ms
PING 192.168.4.2 (192.168.4.2) 56(84) bytes of data.
64 bytes from 192.168.4.2: icmp_seq=1 ttl=61 time=15.3 ms
64 bytes from 192.168.4.2: icmp_seq=2 ttl=61 time=14.1 ms
64 bytes from 192.168.4.2: icmp_seq=3 ttl=61 time=13.1 ms
--- 192.168.4.2 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2001ms
rtt min/avg/max/mdev = 13.171/14.234/15.334/0.883 ms
---------------------------------------------------
host4 sends 3 ping the 3 other hosts
---------------------------------------------------
PING 192.168.1.2 (192.168.1.2) 56(84) bytes of data.
64 bytes from 192.168.1.2: icmp_seq=1 ttl=61 time=21.9 ms
64 bytes from 192.168.1.2: icmp_seq=2 ttl=61 time=21.3 ms
64 bytes from 192.168.1.2: icmp_seq=3 ttl=61 time=14.6 ms
--- 192.168.1.2 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2001ms
rtt min/avg/max/mdev = 14.619/19.284/21.932/3.310 ms
PING 192.168.2.2 (192.168.2.2) 56(84) bytes of data.
64 bytes from 192.168.2.2: icmp_seq=1 ttl=61 time=14.8 ms
64 bytes from 192.168.2.2: icmp_seq=2 ttl=61 time=18.4 ms
64 bytes from 192.168.2.2: icmp_seq=3 ttl=61 time=14.1 ms
--- 192.168.2.2 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2002ms
rtt min/avg/max/mdev = 14.148/15.786/18.409/1.879 ms
PING 192.168.3.2 (192.168.3.2) 56(84) bytes of data.
64 bytes from 192.168.3.2: icmp_seq=1 ttl=61 time=16.2 ms
64 bytes from 192.168.3.2: icmp_seq=2 ttl=61 time=15.1 ms
64 bytes from 192.168.3.2: icmp_seq=3 ttl=61 time=18.9 ms
--- 192.168.3.2 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2001ms
rtt min/avg/max/mdev = 15.117/16.788/18.962/1.612 ms
---------------------------------------------------
If bot spine have all BGP sessions and all ping succeeded, all works well
Congrats ! please check readme file to find some details
---------------------------------------------------
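The final verdict of the script ("all ping succeeded") can also be checked by parsing the ping summaries. A minimal sketch with hypothetical function names: it reads every "--- X ping statistics ---" block and compares transmitted vs received counts. It accepts both the "N received" wording seen above and the "N packets received" wording printed by some other ping implementations.

```python
import re

# Matches "2 received" (as above) and "2 packets received" (other ping variants).
STATS_RE = re.compile(
    r"--- (?P<dst>\S+) ping statistics ---\s+"
    r"(?P<tx>\d+) packets transmitted, (?P<rx>\d+) (?:packets )?received"
)


def ping_results(text: str) -> list[tuple[str, int, int]]:
    """Return (destination, transmitted, received) for every ping summary."""
    return [(m["dst"], int(m["tx"]), int(m["rx"])) for m in STATS_RE.finditer(text)]


def all_pings_ok(text: str) -> bool:
    results = ping_results(text)
    # Fail if nothing was parsed or if any ping lost packets.
    return bool(results) and all(tx > 0 and rx == tx for _, tx, rx in results)
```

Combined with the BGP summary check, this covers both conditions the script prints at the end.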