Difference between revisions of "VyosAristaCVX-VXLAN-lab"

| (One intermediate revision by the same user not shown) | |||

| Line 20: | Line 20: | ||

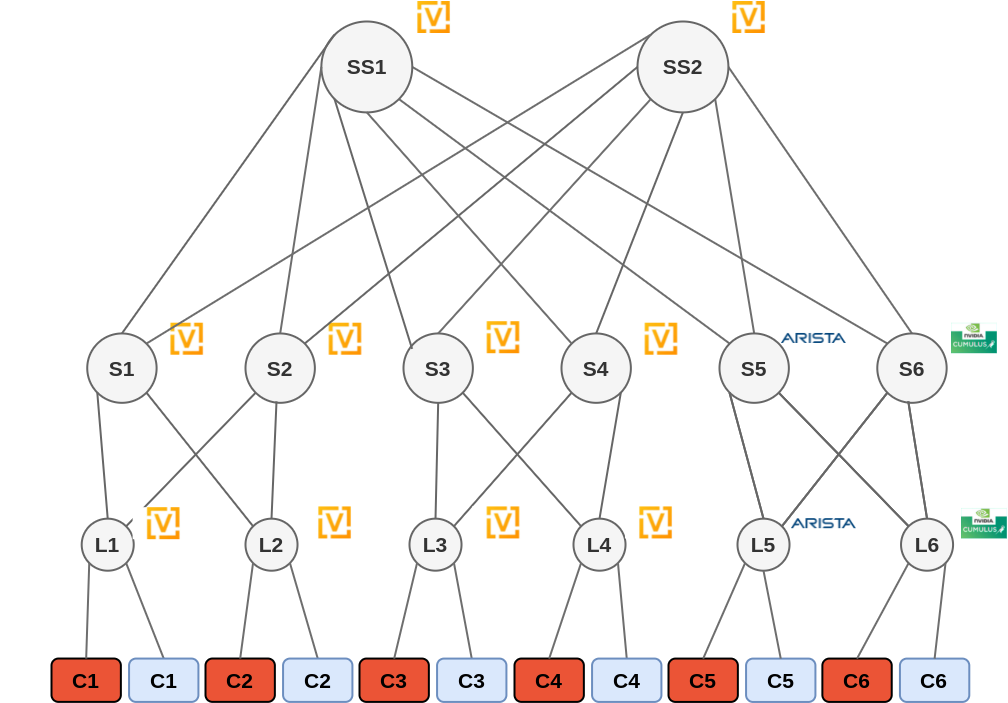

We'll build a 3-tiers fabric, with leaf/spine/superspine devices, VXLAN/EVPN running on all nodes. It gives the following setup: | We'll build a 3-tiers fabric, with leaf/spine/superspine devices, VXLAN/EVPN running on all nodes. It gives the following setup: | ||

| − | [[ | + | [[File:nesevo-Lab-Vyatta-Arista-CVX.png]] |

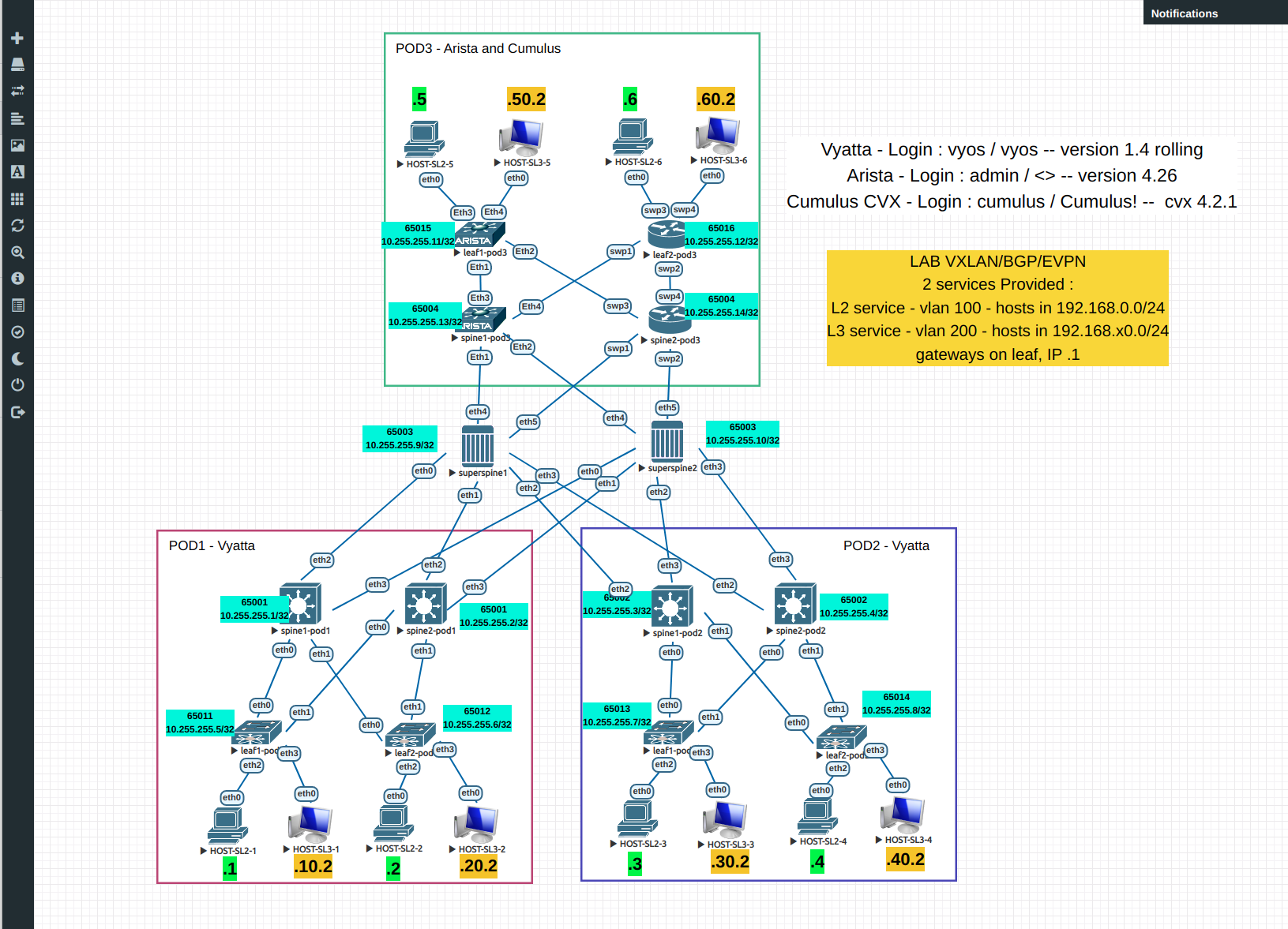

On EVE-NG, we add IP information, ASN, loopback IPs. All other information will be past after when dealing with technical details. | On EVE-NG, we add IP information, ASN, loopback IPs. All other information will be past after when dealing with technical details. | ||

| Line 26: | Line 26: | ||

So, it gives: | So, it gives: | ||

| − | [[ | + | [[File:2022-02_eveng-lab-vyatta-cum-arista.png]] |

==== <span class="mw-headline">VXLAN/BGP infos</span> ==== | ==== <span class="mw-headline">VXLAN/BGP infos</span> ==== | ||

| Line 54: | Line 54: | ||

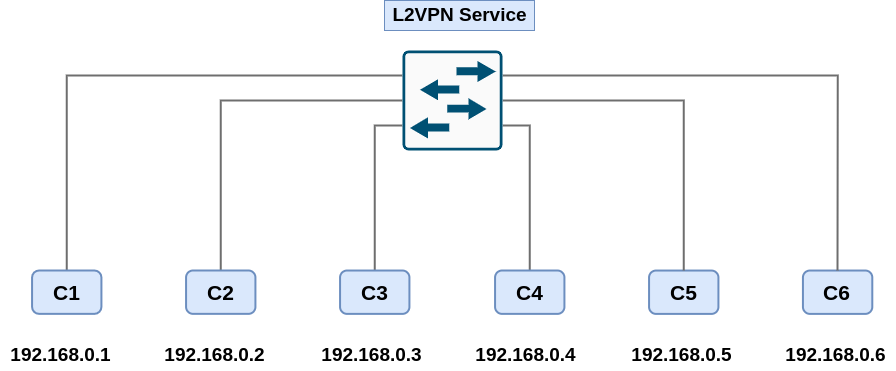

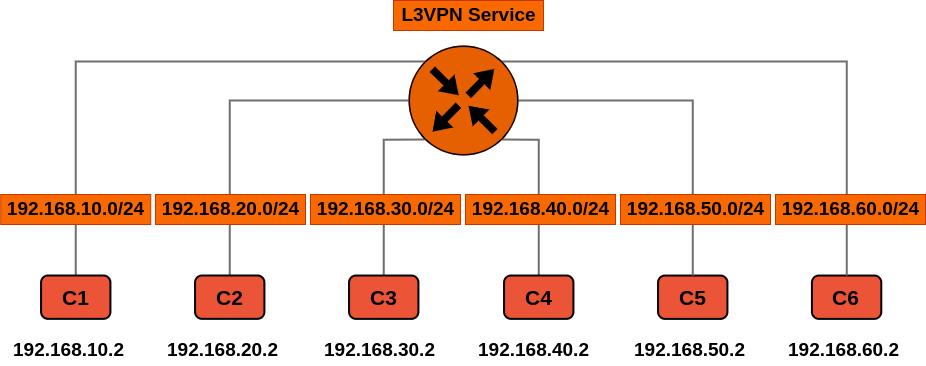

Below some diagrams showing the logic topologies : | Below some diagrams showing the logic topologies : | ||

| − | [[ | + | [[File:nesevo-Lab-Vyatta-Arista-CVX-l2vpn.png]] |

| − | [[ | + | [[File:nesevo-Lab-Vyatta-Arista-CVX-l3vpn.png]] |

| Line 66: | Line 66: | ||

To be noted that the configurations are not "ready-to-prod " and need to be optimized (adding bfd, bgp optimization, etc.). Some typos can subsist. Whatever, the lab works and gives the expected results :) | To be noted that the configurations are not "ready-to-prod " and need to be optimized (adding bfd, bgp optimization, etc.). Some typos can subsist. Whatever, the lab works and gives the expected results :) | ||

| − | Link to devices configurations : | + | Link to devices configurations : [https://wiki.nesevo.com/images/3/32/02.lab-vyos-eos-cvx_v2.zip lab-vyos-eos-cvx_v2.zip] |

=== Lab details === | === Lab details === | ||

Latest revision as of 13:42, 5 April 2022

Introduction

EVPN is steadily gaining ground in the datacenter field. Mainstream vendors now support it, with VXLAN or MPLS for the data plane and BGP for the control plane. In previous labs, we observed that interoperability between vendors was quite good. Nevertheless, even though we have already tested Cumulus VX, other open-source NOSes can also support EVPN.

Today we'll test VyOS in its latest rolling version, which supports EVPN.

The goals of this lab are to:

- create a VXLAN fabric with VyOS nodes

- provide an L2VPN and an L3VPN to client nodes within the fabric

- integrate nodes from other vendors into the fabric, here Arista and Cumulus VX

- test that everything works as expected

Lab setup

To reach those goals, we'll use EVE-NG Community Edition (https://www.eve-ng.net/index.php/download/#DL-COMM) with the following NOS versions:

- Vyatta VyOS 1.4 rolling <-- free to download https://vyos.net/get/nightly-builds/

- Arista vEOS 4.26.0F lab edition <-- requires a support account

- Nvidia Cumulus VX 4.2.1 lab edition <-- free to download https://www.nvidia.com/en-us/networking/ethernet-switching/cumulus-vx/download/

We'll build a 3-tier fabric with leaf/spine/superspine devices, with VXLAN/EVPN running on all nodes. This gives the following setup:

[[File:nesevo-Lab-Vyatta-Arista-CVX.png]]

On EVE-NG, we add IP information, ASNs and loopback IPs. All other information will be given later, when we deal with the technical details.

This gives:

[[File:2022-02_eveng-lab-vyatta-cum-arista.png]]

VXLAN/BGP infos

Note: in our lab, route-distinguisher is constructed on the model <loopback_ip>:<vlan_id>

| Service | VLAN ID | Route-target | VNI | Comments |
|---|---|---|---|---|
| L2 cross-DC | 100 | target:65000:100 | 100 | The 6 clients are in the same broadcast domain / subnet. |
| L3VPN cross-DC | 200 | target:65000:200 | 200 | The 6 clients have their own subnets. Here we use only one VNI because no ESI will be implemented. One leaf, one subnet. |
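As a minimal sketch of the naming convention described above, the two helpers below build a route distinguisher on the `<loopback_ip>:<vlan_id>` model and a route-target of the form used in the table. The function names and the `base_asn` parameter are illustrative assumptions, not part of any vendor tooling.

```python
import ipaddress


def route_distinguisher(loopback_ip: str, vlan_id: int) -> str:
    """Build an RD on the lab's <loopback_ip>:<vlan_id> model."""
    ipaddress.IPv4Address(loopback_ip)  # raises ValueError on a malformed address
    if not 1 <= vlan_id <= 4094:
        raise ValueError(f"VLAN id out of range: {vlan_id}")
    return f"{loopback_ip}:{vlan_id}"


def route_target(vni: int, base_asn: int = 65000) -> str:
    """Build the common route-target used in the table, e.g. target:65000:100."""
    return f"target:{base_asn}:{vni}"


print(route_distinguisher("10.255.255.11", 100))  # 10.255.255.11:100
print(route_target(200))                          # target:65000:200
```

Keeping the route-target independent from the per-leaf ASN is what lets all vendors import each other's routes, whatever route-target each NOS generates automatically.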

Client devices are represented by computers on EVE-NG. IPs in green are using the L2VPN service (extended layer 2 between pods) and IPs in orange the L3VPN service (routing service).

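Below are some diagrams showing the logical topologies:

[[File:nesevo-Lab-Vyatta-Arista-CVX-l2vpn.png]]

[[File:nesevo-Lab-Vyatta-Arista-CVX-l3vpn.png]]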

The link below gives the configurations, so that you can quickly reproduce the lab.

Note that the configurations are not production-ready and need to be optimized (adding BFD, BGP tuning, etc.). Some typos may remain. Nevertheless, the lab works and gives the expected results :)

Link to device configurations: lab-vyos-eos-cvx_v2.zip (https://wiki.nesevo.com/images/3/32/02.lab-vyos-eos-cvx_v2.zip)

Lab details

Considering that the lab is up and running, we'll now come back to the architecture.

We have 3 kinds of network nodes:

- leaf switches, at the edge, which provide L2 and L3 services

- pairs of spine switches, which allow leaves from the same pod to exchange traffic. They are also connected to the superspines to reach other pods

- superspine switches, which interconnect the spine switches from the different pods, thus allowing inter-pod traffic

The control planes of all those network devices exchange routing information through 2 layers of BGP: underlay and overlay.

The role of the "underlay" is to dynamically route traffic between the loopback interfaces of the different nodes. Loopback interfaces are used to establish the overlay BGP sessions and serve as VTEP interfaces on leaf nodes. Generally, it's better to have 2 different loopbacks for these two uses (for an anycast VTEP setup, for example), but some vendors don't allow 2 loopback interfaces in the same routing context. In any case, in our setup, a single loopback is fine.

Here, the underlay runs eBGP with the IPv4 unicast family only, plus a route-map so that only loopback interface IPs are redistributed.

- Each node establishes a session with each directly connected peer.

- Each leaf node has a dedicated ASN.

- Each pair of spines/superspines shares one ASN, following best practices to optimize the routing table (ECMP).

The role of the "overlay" is to exchange EVPN routes, here types 2, 3 and 5 (type 1/4 routes do not seem to be handled by VyOS at the moment, to be confirmed).

- eBGP multihop sessions are established to the loopback IPs of the directly connected neighbors

- policies/options are set so that the original next hop is not changed when routes are propagated

- for L2 services, we only allow the redistribution of type 2 (macip) and type 3 (inclusive multicast, for BUM handling) routes

- for L3 services, we only allow the redistribution of type 5 (prefix) routes

- to improve scalability, it could be worthwhile to use dedicated nodes (VM or physical) as route servers. Here, we could connect them to the superspines. The spines/superspines would then only handle the underlay and would not become a bottleneck for control plane scalability

Moreover, in this lab setup, no BGP PIC / BFD / VMTO / other options are implemented to optimize convergence or traffic delivery. The goal was to verify that VyOS is compatible with a basic but functional VXLAN setup.

Now we'll look at the outputs from the leaf / spine / superspine nodes of the different vendors to verify that everything works as expected.

We verify that the underlay works well. All is fine if:

- we have an eBGP session per directly connected peer

- we receive the same number of prefixes per peer type:

  - a leaf has to receive the same number of prefixes from each of its spine neighbors

  - spine neighbors have to receive the same number of prefixes from the superspines, and vice versa

- all VTEP IPs are known in the GRT (global routing table / default routing instance)
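The first two checks can be sketched in a few lines of Python. This is only an illustration under assumptions: it expects FRR-style summary lines (the format printed by VyOS and Cumulus in this article), it does not handle the Arista layout, and the function names are hypothetical. The sample lines are copied from the VyOS leaf output shown in this article.

```python
SUMMARY = """\
10.254.254.9 4 65001 29993 29988 0 0 0 02w6d19h 17 19 N/A
10.254.254.11 4 65001 17218 17220 0 0 0 01w4d22h 17 19 N/A
"""


def prefixes_received(summary: str) -> dict:
    """Map neighbor -> PfxRcd for established sessions in an FRR-style summary."""
    result = {}
    for line in summary.splitlines():
        fields = line.split()
        # Columns: Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd ...
        # State/PfxRcd is a number only when the session is established.
        if len(fields) >= 10 and fields[1] == "4" and fields[9].isdigit():
            result[fields[0]] = int(fields[9])
    return result


def underlay_consistent(summary: str, expected_peers: int) -> bool:
    """True when every expected peer is established and all send the same prefix count."""
    counts = prefixes_received(summary)
    return len(counts) == expected_peers and len(set(counts.values())) == 1


print(prefixes_received(SUMMARY))
print(underlay_consistent(SUMMARY, expected_peers=2))
```

A mismatch between peers is not always an error (a recently flapped session or a missing filter can explain it), so a `False` result is a hint to look closer rather than a verdict.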

On VyOS

Below we can see that we receive 17 prefixes from both neighbors and that we know all the loopback IPs of the setup (13 remote, 1 local). We receive slightly more routes than needed, because the filters I configured on the Cumulus nodes seem to be incorrectly built.

Note that a spine announces all the loopback IPs except the one of its twin: the two are not directly connected, and the superspines respect the loop-protection constraint (routes originated by an ASN "X" are not reflected to a peer in the same ASN "X").

vyos@leaf1-pod1:~$ show ip bgp summary established

IPv4 Unicast Summary (VRF default):

BGP router identifier 10.255.255.5, local AS number 65011 vrf-id 0

BGP table version 20

RIB entries 37, using 6808 bytes of memory

Peers 2, using 1446 KiB of memory

Peer groups 2, using 128 bytes of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt Desc

10.254.254.9 4 65001 29993 29988 0 0 0 02w6d19h 17 19 N/A

10.254.254.11 4 65001 17218 17220 0 0 0 01w4d22h 17 19 N/A

Displayed neighbors 2

Total number of neighbors 2

vyos@leaf1-pod1:~$ show ip route | match /32

B>* 10.255.255.1/32 [20/0] via 10.254.254.9, eth0, weight 1, 02w6d19h

B>* 10.255.255.2/32 [20/0] via 10.254.254.11, eth1, weight 1, 01w4d22h

B>* 10.255.255.3/32 [20/0] via 10.254.254.9, eth0, weight 1, 01w4d18h

B>* 10.255.255.4/32 [20/0] via 10.254.254.9, eth0, weight 1, 01w4d18h

C>* 10.255.255.5/32 is directly connected, dum0, 02w6d19h

B>* 10.255.255.6/32 [20/0] via 10.254.254.9, eth0, weight 1, 01w4d22h

B>* 10.255.255.7/32 [20/0] via 10.254.254.9, eth0, weight 1, 01w4d18h

B>* 10.255.255.8/32 [20/0] via 10.254.254.9, eth0, weight 1, 01w4d18h

B>* 10.255.255.9/32 [20/0] via 10.254.254.9, eth0, weight 1, 01w4d18h

B>* 10.255.255.10/32 [20/0] via 10.254.254.9, eth0, weight 1, 01w4d18h

B>* 10.255.255.11/32 [20/0] via 10.254.254.9, eth0, weight 1, 01w2d01h

B>* 10.255.255.12/32 [20/0] via 10.254.254.9, eth0, weight 1, 01w2d00h

B>* 10.255.255.13/32 [20/0] via 10.254.254.9, eth0, weight 1, 01w2d01h

B>* 10.255.255.14/32 [20/0] via 10.254.254.9, eth0, weight 1, 01w2d00h
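To confirm the "13 remote, 1 local" count without reading the table by eye, a small sketch like the following can classify the /32 routes. It assumes FRR-style route lines as printed above and assumes the loopback plan is 10.255.255.1 to 10.255.255.14, which is what this output shows. The helper name is hypothetical.

```python
import ipaddress


def loopbacks_by_origin(route_lines):
    """Split /32 routes into BGP-learned (remote) and connected (local) sets."""
    remote, local = set(), set()
    for line in route_lines:
        fields = line.split()
        if len(fields) < 2 or not fields[1].endswith("/32"):
            continue
        address = ipaddress.ip_network(fields[1]).network_address
        if fields[0].startswith("B"):
            remote.add(address)
        elif fields[0].startswith("C"):
            local.add(address)
    return remote, local


# Assumed loopback plan: 10.255.255.1 .. 10.255.255.14.
expected = {ipaddress.IPv4Address("10.255.255.0") + i for i in range(1, 15)}

sample = [
    "B>* 10.255.255.1/32 [20/0] via 10.254.254.9, eth0, weight 1, 02w6d19h",
    "C>* 10.255.255.5/32 is directly connected, dum0, 02w6d19h",
]
remote, local = loopbacks_by_origin(sample)
missing = expected - remote - local
print(len(remote), len(local), len(missing))  # 1 1 12 with this two-line sample
```

Fed with the full output above, `missing` should be empty when every VTEP loopback is reachable.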

On Arista

We verify that we receive the same prefixes from both spines: leaf, superspine and remote pod spine IPs (we note that some filters are missing on the spine, since we also receive /31 interconnection routes):

leaf1-pod3#show ip bgp neighbors 10.254.254.43 received-routes

BGP routing table information for VRF default

Router identifier 10.255.255.11, local AS number 65015

Route status codes: s - suppressed, * - valid, > - active, E - ECMP head, e - ECMP

S - Stale, c - Contributing to ECMP, b - backup, L - labeled-unicast

% - Pending BGP convergence

Origin codes: i - IGP, e - EGP, ? - incomplete

RPKI Origin Validation codes: V - valid, I - invalid, U - unknown

AS Path Attributes: Or-ID - Originator ID, C-LST - Cluster List, LL Nexthop - Link Local Nexthop

Network Next Hop Metric AIGP LocPref Weight Path

10.254.254.36/31 10.254.254.43 0 - - - 65004 ?

10.254.254.38/31 10.254.254.43 0 - - - 65004 ?

10.254.254.42/31 10.254.254.43 0 - - - 65004 ?

10.254.254.44/31 10.254.254.43 - - - - 65004 65016 ?

10.254.254.46/31 10.254.254.43 0 - - - 65004 ?

* 10.255.255.1/32 10.254.254.43 - - - - 65004 65003 65001 ?

* 10.255.255.2/32 10.254.254.43 - - - - 65004 65003 65001 ?

* 10.255.255.3/32 10.254.254.43 - - - - 65004 65003 65002 ?

* 10.255.255.4/32 10.254.254.43 - - - - 65004 65003 65002 ?

* 10.255.255.5/32 10.254.254.43 - - - - 65004 65003 65001 65011 ?

* 10.255.255.6/32 10.254.254.43 - - - - 65004 65003 65001 65012 ?

* 10.255.255.7/32 10.254.254.43 - - - - 65004 65003 65002 65013 ?

* 10.255.255.8/32 10.254.254.43 - - - - 65004 65003 65002 65014 ?

* 10.255.255.9/32 10.254.254.43 - - - - 65004 65003 ?

* 10.255.255.10/32 10.254.254.43 - - - - 65004 65003 ?

* 10.255.255.12/32 10.254.254.43 - - - - 65004 65016 ?

* > 10.255.255.14/32 10.254.254.43 0 - - - 65004 ?

leaf1-pod3#show ip bgp neighbors 10.254.254.41 received-routes

BGP routing table information for VRF default

Router identifier 10.255.255.11, local AS number 65015

Route status codes: s - suppressed, * - valid, > - active, E - ECMP head, e - ECMP

S - Stale, c - Contributing to ECMP, b - backup, L - labeled-unicast

% - Pending BGP convergence

Origin codes: i - IGP, e - EGP, ? - incomplete

RPKI Origin Validation codes: V - valid, I - invalid, U - unknown

AS Path Attributes: Or-ID - Originator ID, C-LST - Cluster List, LL Nexthop - Link Local Nexthop

Network Next Hop Metric AIGP LocPref Weight Path

* > 10.255.255.1/32 10.254.254.41 - - - - 65004 65003 65001 ?

* > 10.255.255.2/32 10.254.254.41 - - - - 65004 65003 65001 ?

* > 10.255.255.3/32 10.254.254.41 - - - - 65004 65003 65002 ?

* > 10.255.255.4/32 10.254.254.41 - - - - 65004 65003 65002 ?

* > 10.255.255.5/32 10.254.254.41 - - - - 65004 65003 65001 65011 ?

* > 10.255.255.6/32 10.254.254.41 - - - - 65004 65003 65001 65012 ?

* > 10.255.255.7/32 10.254.254.41 - - - - 65004 65003 65002 65013 ?

* > 10.255.255.8/32 10.254.254.41 - - - - 65004 65003 65002 65014 ?

* > 10.255.255.9/32 10.254.254.41 - - - - 65004 65003 ?

* > 10.255.255.10/32 10.254.254.41 - - - - 65004 65003 ?

* > 10.255.255.12/32 10.254.254.41 - - - - 65004 65016 ?

* > 10.255.255.13/32 10.254.254.41 - - - - 65004 i

On Cumulus

cumulus@cumulus:mgmt:~$ net show bgp summary

show bgp ipv4 unicast summary

=============================

BGP router identifier 10.255.255.12, local AS number 65016 vrf-id 0

BGP table version 103

RIB entries 37, using 7104 bytes of memory

Peers 2, using 43 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd

10.254.254.45 4 65004 306764 261331 0 0 0 01:39:02 12

spine1-pod3(10.254.254.47) 4 65004 261602 261664 0 0 0 01w2d02h 16

Total number of neighbors 2

[...]

cumulus@cumulus:mgmt:~$ net show route | grep /32

B>* 10.255.255.1/32 [20/0] via 10.254.254.45, swp1, weight 1, 01:39:03

B>* 10.255.255.2/32 [20/0] via 10.254.254.45, swp1, weight 1, 01:39:03

B>* 10.255.255.3/32 [20/0] via 10.254.254.45, swp1, weight 1, 01:39:03

B>* 10.255.255.4/32 [20/0] via 10.254.254.45, swp1, weight 1, 01:39:03

B>* 10.255.255.5/32 [20/0] via 10.254.254.45, swp1, weight 1, 01:39:03

B>* 10.255.255.6/32 [20/0] via 10.254.254.45, swp1, weight 1, 01:39:03

B>* 10.255.255.7/32 [20/0] via 10.254.254.45, swp1, weight 1, 01:39:03

B>* 10.255.255.8/32 [20/0] via 10.254.254.45, swp1, weight 1, 01:39:03

B>* 10.255.255.9/32 [20/0] via 10.254.254.45, swp1, weight 1, 01:39:03

B>* 10.255.255.10/32 [20/0] via 10.254.254.45, swp1, weight 1, 01:39:03

B>* 10.255.255.11/32 [20/0] via 10.254.254.45, swp1, weight 1, 01:39:03

C>* 10.255.255.12/32 is directly connected, lo, 01w2d02h

B>* 10.255.255.13/32 [20/0] via 10.254.254.45, swp1, weight 1, 01:39:03

B>* 10.255.255.14/32 [20/0] via 10.254.254.47, swp2, weight 1, 01w2d02h

We can also verify that routing works by pinging remote loopback IPs from the local loopback, and check the status of the eBGP EVPN sessions:

# ping example from VyOS leaf

vyos@leaf1-pod1:~$ ping 10.255.255.12 source-address 10.255.255.5

PING 10.255.255.12 (10.255.255.12) from 10.255.255.5 : 56(84) bytes of data.

64 bytes from 10.255.255.12: icmp_seq=1 ttl=61 time=2.60 ms

64 bytes from 10.255.255.12: icmp_seq=2 ttl=61 time=2.54 ms

64 bytes from 10.255.255.12: icmp_seq=3 ttl=61 time=2.44 ms

# check of EVPN sessions on the different vendors

# vyos

vyos@leaf1-pod1:~$ show bgp l2vpn evpn summary

BGP router identifier 10.255.255.5, local AS number 65011 vrf-id 0

BGP table version 0

RIB entries 31, using 5704 bytes of memory

Peers 2, using 1446 KiB of memory

Peer groups 2, using 128 bytes of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt Desc

10.255.255.1 4 65001 30205 30208 0 0 0 02w6d21h 13 13 N/A

10.255.255.2 4 65001 17392 17429 0 0 0 01w5d00h 11 13 N/A

# Arista

leaf1-pod3#show bgp evpn summary

BGP summary information for VRF default

Router identifier 10.255.255.11, local AS number 65015

Neighbor Status Codes: m - Under maintenance

Description Neighbor V AS MsgRcvd MsgSent InQ OutQ Up/Down State PfxRcd PfxAcc

OVL_spine1-pod3 10.255.255.13 4 65004 15608 15503 0 0 00:03:47 Estab 17 17

OVL_spine2-pod3 10.255.255.14 4 65004 262780 308558 0 0 9d02h Estab 13 13

# Cumulus

cumulus@cumulus:mgmt:~$ net show bgp l2vpn evpn summary

BGP router identifier 10.255.255.12, local AS number 65016 vrf-id 0

BGP table version 0

RIB entries 29, using 5568 bytes of memory

Peers 2, using 43 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd

10.255.255.13 4 65004 307243 261693 0 0 0 00:04:25 14

spine1-pod3(10.255.255.14) 4 65004 262001 262025 0 0 0 01w2d02h 10

On EVPN, we should receive roughly the same number of prefixes from both spines, but there seem to be differences in best path election and redistribution between vendors. This may be worth digging into. The most important point is to verify that the FIBs for the services are consistent.

L2 service implementation

On that topic, we'll first focus on the Layer 2 service. To be sure that everything works as expected, we have to check that:

- MAC address tables are consistent across the whole fabric. In a traditional L2 setup, switch FIBs depend on the traffic passing through them, and a MAC address can be seen "everywhere" only if the host sends BUM traffic on the network. In VXLAN, in a mac-vrf topology without filters, the MAC address is propagated across the whole fabric (within BGP updates) even if the host doesn't send BUM traffic. But, as in a traditional L2 topology, you have to make sure that MAC address table aging times are consistent on all devices, with an aging time longer than the ARP timer on hosts.

- type 2 routes are visible on every leaf node for a given VNI, and the type 3 routes needed to reach remote VTEPs are learned

- a common route-target for the VRF routes is used/set within policies. Indeed, vendors differ in how they "tag" routes (features to auto-generate route-targets, hard-coded algorithms, etc.). Here, on VyOS, we had to add administrative extended communities within a route-map for the L2 VNI. We'll show it below

- all fabric connections (interconnections between network nodes) have a sufficient "IP MTU". Keep in mind that the VXLAN overhead is 50 bytes. So, to make sure jumbo traffic from hosts passes, configure at least 9050 bytes. I would advise pushing it to 9100 bytes in case VXLAN is also used on the client hosts, for virtualized setups on servers.

Check the FDB on a leaf switch + debug type 2 route propagation for leaf1 pod1
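The MTU figures above follow from simple arithmetic. The sketch below assumes an IPv4 underlay and untagged inner frames. Reading the 9100-byte advice as "one more VXLAN layer added by the hosts" is my interpretation, and the function name is illustrative.

```python
# Bytes added in front of the original IP packet when it crosses the fabric.
INNER_ETHERNET = 14  # original Ethernet header, carried inside the tunnel (no 802.1Q tag)
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20

VXLAN_OVERHEAD = INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4


def fabric_ip_mtu(host_ip_mtu: int, nested_vxlan: bool = False) -> int:
    """Minimum IP MTU on fabric links for a given host IP MTU."""
    layers = 2 if nested_vxlan else 1  # hosts running VXLAN add a second encapsulation
    return host_ip_mtu + layers * VXLAN_OVERHEAD


print(VXLAN_OVERHEAD)             # 50
print(fabric_ip_mtu(9000))        # 9050
print(fabric_ip_mtu(9000, True))  # 9100
```

An IPv6 underlay or a VLAN tag kept inside the tunnel would increase the overhead, so the constants need adjusting in those cases.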

##############

# VyOS

##############

vyos@leaf1-pod1:~$ show bridge br100 fdb

50:01:00:01:00:02 dev eth2 vlan 1 master br100 permanent

50:01:00:01:00:02 dev eth2 master br100 permanent

33:33:00:00:00:01 dev eth2 self permanent

33:33:00:00:00:02 dev eth2 self permanent

01:00:5e:00:00:01 dev eth2 self permanent

01:80:c2:00:00:0e dev eth2 self permanent

01:80:c2:00:00:03 dev eth2 self permanent

01:80:c2:00:00:00 dev eth2 self permanent

33:33:00:00:00:01 dev br100 self permanent

33:33:00:00:00:02 dev br100 self permanent

33:33:ff:01:00:02 dev br100 self permanent

01:00:5e:00:00:6a dev br100 self permanent

33:33:00:00:00:6a dev br100 self permanent

01:00:5e:00:00:01 dev br100 self permanent

33:33:ff:00:00:00 dev br100 self permanent

00:50:79:66:68:15 dev vxlan100 vlan 1 extern_learn master br100

00:50:79:66:68:15 dev vxlan100 extern_learn master br100

8a:d0:00:16:1c:17 dev vxlan100 vlan 1 master br100 permanent

8a:d0:00:16:1c:17 dev vxlan100 master br100 permanent

00:00:00:00:00:00 dev vxlan100 dst 10.255.255.6 self permanent

00:00:00:00:00:00 dev vxlan100 dst 10.255.255.11 self permanent

00:00:00:00:00:00 dev vxlan100 dst 10.255.255.12 self permanent

00:00:00:00:00:00 dev vxlan100 dst 10.255.255.7 self permanent

00:00:00:00:00:00 dev vxlan100 dst 10.255.255.8 self permanent

00:50:79:66:68:15 dev vxlan100 dst 10.255.255.12 self extern_learn

# and to focus on remote nodes

vyos@leaf1-pod1:~$ show bridge br100 fdb | grep "self extern_learn"

00:50:79:66:68:15 dev vxlan100 dst 10.255.255.12 self extern_learn

00:50:79:66:68:13 dev vxlan100 dst 10.255.255.11 self extern_learn

00:50:79:66:68:19 dev vxlan100 dst 10.255.255.8 self extern_learn

00:50:79:66:68:0c dev vxlan100 dst 10.255.255.6 self extern_learn

00:50:79:66:68:17 dev vxlan100 dst 10.255.255.7 self extern_learn

# or on local clients

vyos@leaf1-pod1:~$ show bridge br100 fdb | grep eth | grep -v perm

00:50:79:66:68:0b dev eth2 master br100
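# The same filtering as the grep commands above can be sketched in Python.
# Assumptions: the input uses the Linux bridge FDB line format shown above,
# and the function name is hypothetical. Entries with both "extern_learn" and
# "dst" are the MAC addresses learned through EVPN, with their remote VTEP.

```python
def remote_macs(fdb_lines):
    """Map MAC -> remote VTEP for entries installed by the control plane."""
    table = {}
    for line in fdb_lines:
        fields = line.split()
        # Skip flood entries (all-zero MAC, "permanent") and local bridge entries.
        if "extern_learn" in fields and "dst" in fields:
            table[fields[0]] = fields[fields.index("dst") + 1]
    return table


sample = [
    "00:50:79:66:68:15 dev vxlan100 vlan 1 extern_learn master br100",
    "00:00:00:00:00:00 dev vxlan100 dst 10.255.255.6 self permanent",
    "00:50:79:66:68:15 dev vxlan100 dst 10.255.255.12 self extern_learn",
    "00:50:79:66:68:13 dev vxlan100 dst 10.255.255.11 self extern_learn",
]
for mac, vtep in remote_macs(sample).items():
    print(mac, "->", vtep)
```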

# Now if we want to focus on route type 3 learned

Displayed 7 prefixes (12 paths) (of requested type)

vyos@leaf1-pod1:~$ show bgp l2vpn evpn route type 3 | grep Dis

Route Distinguisher: 10.255.255.5:3

Route Distinguisher: 10.255.255.6:3

Route Distinguisher: 10.255.255.7:2

Route Distinguisher: 10.255.255.8:2

Route Distinguisher: 10.255.255.11:100

Route Distinguisher: 10.255.255.12:100

# we notice that the RDs for VyOS nodes don't follow the <Loopback:vni> logic. This is because, for the moment, we can't set it per VNI (or I didn't find how to do that)

##############

# Arista

##############

# we check the FDB. Remote hosts are behind port Vx1

leaf1-pod3#show mac address-table vlan 100

Mac Address Table

------------------------------------------------------------------

Vlan Mac Address Type Ports Moves Last Move

---- ----------- ---- ----- ----- ---------

100 0050.7966.680c DYNAMIC Vx1 1 0:00:38 ago

100 0050.7966.6813 DYNAMIC Et3 1 0:14:08 ago

100 0050.7966.6815 DYNAMIC Vx1 1 9 days, 2:36:39 ago

100 0050.7966.6817 DYNAMIC Vx1 1 0:00:36 ago

100 0050.7966.6819 DYNAMIC Vx1 1 0:00:35 ago

Total Mac Addresses for this criterion: 5

# we check that all VTEPs are ready for BUM

leaf1-pod3#show bgp evpn route-type imet | i >

Route status codes: s - suppressed, * - valid, > - active, E - ECMP head, e - ECMP

* >Ec RD: 10.255.255.5:3 imet 10.255.255.5

* >Ec RD: 10.255.255.6:3 imet 10.255.255.6

* >Ec RD: 10.255.255.7:2 imet 10.255.255.7

* >Ec RD: 10.255.255.8:2 imet 10.255.255.8

* > RD: 10.255.255.11:100 imet 10.255.255.11

* >Ec RD: 10.255.255.12:100 imet 10.255.255.12

[...]

leaf1-pod3#show vxlan vtep detail

Remote VTEPS for Vxlan1:

VTEP Learned Via MAC Address Learning Tunnel Type(s)

------------------ ------------------ -------------------------- --------------

10.255.255.5 control plane control plane flood, unicast

10.255.255.6 control plane control plane flood, unicast

10.255.255.7 control plane control plane flood, unicast

10.255.255.8 control plane control plane flood, unicast

10.255.255.12 control plane control plane flood, unicast

##############

# Cumulus

##############

# we check the FDB. Remote hosts are behind the vni100 interface

cumulus@cumulus:mgmt:~$ net show bridge macs vlan 100

VLAN Master Interface MAC TunnelDest State Flags LastSeen

---- ------ --------- ----------------- ---------- --------- ------------ ----------------

100 bridge bridge 50:01:00:11:00:03 permanent 9 days, 02:47:42

100 bridge swp3 00:50:79:66:68:15 00:00:06

100 bridge vni100 00:50:79:66:68:0c extern_learn 00:00:40

100 bridge vni100 00:50:79:66:68:13 extern_learn 00:21:01

100 bridge vni100 00:50:79:66:68:17 extern_learn 00:00:38

100 bridge vni100 00:50:79:66:68:19 extern_learn 00:00:36

# --> here we see that the MAC address of the host on leaf1 pod1 is missing.

# we check the EVPN routes on the Cumulus node and see that, from leaf1 pod1 for VNI 100, we only have these routes

Route Distinguisher: 10.255.255.5:3

* [3]:[0]:[32]:[10.255.255.5]

10.255.255.5 0 65004 65003 65001 65011 i

RT:65000:100 RT:65011:100 ET:8

* [3]:[0]:[32]:[10.255.255.5]

10.255.255.5 0 65004 65003 65001 65011 i

RT:65000:100 RT:65011:100 ET:8

*> [3]:[0]:[32]:[10.255.255.5]

10.255.255.5 0 65004 65003 65001 65011 i

RT:65000:100 RT:65011:100 ET:8

# --> something is wrong on the remote leaf.

# on the leaf, we check the export policy for EVPN routes

route-map RM-OUT-VNI {

rule 1 {

action permit

match {

evpn {

route-type multicast

vni 100

}

}

set {

extcommunity {

rt 65000:100

}

}

}

rule 2 {

action permit

match {

evpn {

route-type prefix

vni 100

}

}

set {

extcommunity {

rt 65000:100

}

}

}

rule 3 {

action permit

match {

evpn {

vni 200

}

}

set {

extcommunity {

rt 65000:200

}

# we notice that a typo in rule numbering removed the export of macip routes for VNI 100: rule 2 matches route-type prefix instead of macip.

# we correct that:

route-map RM-OUT-VNI {

rule 1 {

action permit

match {

evpn {

route-type multicast

vni 100

}

}

set {

extcommunity {

rt 65000:100

}

}

}

rule 2 {

action permit

match {

evpn {

route-type macip

vni 100

}

}

set {

extcommunity {

rt 65000:100

}

}

}

rule 3 {

action permit

match {

evpn {

route-type prefix

vni 200

}

}

set {

extcommunity {

rt 65000:200

}

# once corrected, we check the FDB on the Cumulus node + the EVPN routes

cumulus@cumulus:mgmt:~$ net show bridge macs vlan 100

VLAN Master Interface MAC TunnelDest State Flags LastSeen

---- ------ --------- ----------------- ---------- --------- ------------ ----------------

100 bridge bridge 50:01:00:11:00:03 permanent 9 days, 02:51:48

100 bridge swp3 00:50:79:66:68:15 00:02:01

100 bridge vni100 00:50:79:66:68:0b extern_learn 00:00:26

100 bridge vni100 00:50:79:66:68:0c extern_learn 00:04:46

100 bridge vni100 00:50:79:66:68:13 extern_learn 00:25:08

100 bridge vni100 00:50:79:66:68:17 extern_learn 00:04:45

100 bridge vni100 00:50:79:66:68:19 extern_learn 00:04:43

Route Distinguisher: 10.255.255.5:3

* [2]:[0]:[48]:[00:50:79:66:68:0b]

10.255.255.5 0 65004 65003 65001 65011 i

RT:65000:100 RT:65011:100 ET:8

* [2]:[0]:[48]:[00:50:79:66:68:0b]

10.255.255.5 0 65004 65003 65001 65011 i

RT:65000:100 RT:65011:100 ET:8

*> [2]:[0]:[48]:[00:50:79:66:68:0b]

10.255.255.5 0 65004 65003 65001 65011 i

RT:65000:100 RT:65011:100 ET:8

* [3]:[0]:[32]:[10.255.255.5]

10.255.255.5 0 65004 65003 65001 65011 i

RT:65000:100 RT:65011:100 ET:8

* [3]:[0]:[32]:[10.255.255.5]

10.255.255.5 0 65004 65003 65001 65011 i

RT:65000:100 RT:65011:100 ET:8

*> [3]:[0]:[32]:[10.255.255.5]

10.255.255.5 0 65004 65003 65001 65011 i

RT:65000:100 RT:65011:100 ET:8

# we now have type 2 routes, everything is fine and we learn the client MAC 00:50:79:66:68:0b

# Note that traffic was already working between the client hosts, but traffic from the Cumulus leaf client to the VyOS leaf client was flooded to all leaves, since the MAC address was not advertised on the fabric
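The mistake found in this section (no rule exporting macip routes for VNI 100) is the kind of error a small consistency check can catch before deployment. The sketch below is a hypothetical model, not a VyOS API: each rule is reduced to a dictionary with a `vni` and an optional `route_type`, and it assumes a route that matches no rule is not exported with the common route-target.

```python
# Route types each service must export, following the overlay design above.
REQUIRED = {
    100: {"multicast", "macip"},  # L2 service: type 3 and type 2
    200: {"prefix"},              # L3 service: type 5
}


def missing_exports(rules, required=REQUIRED):
    """Return {vni: route types that no rule permits}."""
    covered = {vni: set() for vni in required}
    for rule in rules:
        vni = rule["vni"]
        if vni not in covered:
            continue
        route_type = rule.get("route_type")
        if route_type is None:
            covered[vni] |= required[vni]  # no route-type match: every type passes
        else:
            covered[vni].add(route_type)
    gaps = {vni: required[vni] - covered[vni] for vni in required}
    return {vni: types for vni, types in gaps.items() if types}


faulty = [
    {"vni": 100, "route_type": "multicast"},
    {"vni": 100, "route_type": "prefix"},
    {"vni": 200},
]
fixed = [
    {"vni": 100, "route_type": "multicast"},
    {"vni": 100, "route_type": "macip"},
    {"vni": 200, "route_type": "prefix"},
]
print(missing_exports(faulty))  # {100: {'macip'}}
print(missing_exports(fixed))   # {}
```

The `faulty` and `fixed` lists mirror the two versions of RM-OUT-VNI shown above, so the first call reports exactly the gap that caused the flooding.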

It could be interesting to check how each node is configured to deliver the service, but the article would be too long. So, to sum up the steps to provide an L2 service:

- create a VLAN (on Arista) / bridge (VyOS and Cumulus) + associate it with the VNI / VXLAN instance / interface

- create the mac-vrf + define the route-target in BGP (Arista + Cumulus) / update the route-maps + community list to allow the redistribution of the VNI routes (type 2 and type 3) on VyOS

L3 service implementation

Now we'll look at the L3 service. In this kind of setup, we need to implement / check that:

- leaf IP subnets are correctly redistributed between all leaves (here we are in an "any to any" VRF topology)

- we only redistribute IP prefix routes. Type 2 routes (MAC addresses) don't need to be redistributed between leaves

- all clients can ping each other through the L3VPN

To achieve that, we need a configuration analogous to the one for the L2 service, with the following differences:

- create a VLAN (on Arista) / bridge (VyOS and Cumulus) + associate it with the VNI / VXLAN instance / interface

- create the L3 VRF + define the route-target in BGP (Arista + Cumulus + VyOS) + allow the distribution of connected routes (Arista / Cumulus) / update the route-maps + community list to allow the redistribution of the VNI routes (type 5) for VyOS / restrict route redistribution into the VNI to type 5 routes (Cumulus)

Now we check the routing table with common CLI commands, then the EVPN RIB:

# check routes into the vrf

vyos@leaf1-pod1:~$ show ip route vrf VRF-L3-1

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, F - PBR,

f - OpenFabric,

> - selected route, * - FIB route, q - queued, r - rejected, b - backup

t - trapped, o - offload failure

VRF VRF-L3-1:

K>* 0.0.0.0/0 [255/8192] unreachable (ICMP unreachable), 02w6d22h

C>* 192.168.10.0/24 is directly connected, br200, 02w6d22h

B>* 192.168.20.0/24 [20/0] via 10.255.255.6, br200 onlink, weight 1, 05:11:08

B>* 192.168.30.0/24 [20/0] via 10.255.255.7, br200 onlink, weight 1, 05:04:15

B>* 192.168.40.0/24 [20/0] via 10.255.255.8, br200 onlink, weight 1, 04:59:41

B>* 192.168.50.0/24 [20/0] via 10.255.255.11, br200 onlink, weight 1, 05:11:08

B>* 192.168.60.0/24 [20/0] via 10.255.255.12, br200 onlink, weight 1, 05:11:08

leaf1-pod3#show ip route vrf VRF-L3-1

VRF: VRF-L3-1

Codes: C - connected, S - static, K - kernel,

O - OSPF, IA - OSPF inter area, E1 - OSPF external type 1,

E2 - OSPF external type 2, N1 - OSPF NSSA external type 1,

N2 - OSPF NSSA external type2, B - BGP, B I - iBGP, B E - eBGP,

R - RIP, I L1 - IS-IS level 1, I L2 - IS-IS level 2,

O3 - OSPFv3, A B - BGP Aggregate, A O - OSPF Summary,

NG - Nexthop Group Static Route, V - VXLAN Control Service,

DH - DHCP client installed default route, M - Martian,

DP - Dynamic Policy Route, L - VRF Leaked,

G - gRIBI, RC - Route Cache Route

Gateway of last resort is not set

B E 192.168.10.0/24 [200/0] via VTEP 10.255.255.5 VNI 200 router-mac 12:9b:9b:d8:f6:86 local-interface Vxlan1

B E 192.168.20.0/24 [200/0] via VTEP 10.255.255.6 VNI 200 router-mac 50:01:00:02:00:03 local-interface Vxlan1

B E 192.168.30.0/24 [200/0] via VTEP 10.255.255.7 VNI 200 router-mac 50:01:00:09:00:03 local-interface Vxlan1

B E 192.168.40.0/24 [200/0] via VTEP 10.255.255.8 VNI 200 router-mac 50:01:00:0a:00:03 local-interface Vxlan1

C 192.168.50.0/24 is directly connected, Vlan200

B E 192.168.60.0/24 [200/0] via VTEP 10.255.255.12 VNI 200 router-mac 50:01:00:11:00:03 local-interface Vxlan1

cumulus@cumulus:mgmt:~$ net show route vrf VRF-L3-1

show ip route vrf VRF-L3-1

===========================

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued route, r - rejected route

VRF VRF-L3-1:

K>* 0.0.0.0/0 [255/8192] unreachable (ICMP unreachable), 01w2d03h

B>* 192.168.10.0/24 [20/0] via 10.255.255.5, vlan200 onlink, weight 1, 03:18:37

B>* 192.168.20.0/24 [20/0] via 10.255.255.6, vlan200 onlink, weight 1, 03:18:37

B>* 192.168.30.0/24 [20/0] via 10.255.255.7, vlan200 onlink, weight 1, 03:18:37

B>* 192.168.40.0/24 [20/0] via 10.255.255.8, vlan200 onlink, weight 1, 03:18:37

B>* 192.168.50.0/24 [20/0] via 10.255.255.11, vlan200 onlink, weight 1, 03:18:37

C>* 192.168.60.0/24 is directly connected, vlan200, 01w2d03h

# we can notice that the outputs are quite similar, except on Arista, which indicates the VNI for remote targets instead of referencing the local bridge / vlan200
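# The "every leaf subnet is present" check can be sketched in Python.
# Assumptions: FRR-style route lines (VyOS and Cumulus outputs above, not the
# Arista layout), and a subnet plan of 192.168.10.0/24 to 192.168.60.0/24 as
# seen in these tables. The function name is hypothetical.

```python
import ipaddress


def vrf_routes(lines):
    """Map prefix -> next hop (None for connected routes) from FRR-style VRF output."""
    routes = {}
    for line in lines:
        fields = line.split()
        if len(fields) < 2 or fields[0][0] not in "BC":
            continue
        try:
            prefix = ipaddress.ip_network(fields[1])
        except ValueError:
            continue  # legend lines such as "Codes: K - kernel route, ..."
        next_hop = fields[fields.index("via") + 1].rstrip(",") if "via" in fields else None
        routes[prefix] = next_hop
    return routes


expected = {ipaddress.ip_network(f"192.168.{n}.0/24") for n in range(10, 70, 10)}

sample = [
    "Codes: K - kernel route, C - connected, S - static, R - RIP,",
    "K>* 0.0.0.0/0 [255/8192] unreachable (ICMP unreachable), 02w6d22h",
    "C>* 192.168.10.0/24 is directly connected, br200, 02w6d22h",
    "B>* 192.168.20.0/24 [20/0] via 10.255.255.6, br200 onlink, weight 1, 05:11:08",
]
routes = vrf_routes(sample)
print(sorted(str(p) for p in expected - routes.keys()))
```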

# Now we check the routes for the VNI 200

# VyOS

vyos@leaf1-pod1:~$ show bgp l2vpn evpn route type prefix

BGP table version is 1, local router ID is 10.255.255.5

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal

Origin codes: i - IGP, e - EGP, ? - incomplete

EVPN type-1 prefix: [1]:[EthTag]:[ESI]:[IPlen]:[VTEP-IP]

EVPN type-2 prefix: [2]:[EthTag]:[MAClen]:[MAC]:[IPlen]:[IP]

EVPN type-3 prefix: [3]:[EthTag]:[IPlen]:[OrigIP]

EVPN type-4 prefix: [4]:[ESI]:[IPlen]:[OrigIP]

EVPN type-5 prefix: [5]:[EthTag]:[IPlen]:[IP]

Network Next Hop Metric LocPrf Weight Path

Extended Community

Route Distinguisher: 10.255.255.5:200

*> [5]:[0]:[24]:[192.168.10.0]

10.255.255.5 0 32768 ?

ET:8 RT:65000:200 Rmac:12:9b:9b:d8:f6:86

Route Distinguisher: 10.255.255.6:200

*> [5]:[0]:[24]:[192.168.20.0]

10.255.255.6 0 65001 65012 ?

RT:65000:200 ET:8 Rmac:50:01:00:02:00:03

Route Distinguisher: 10.255.255.7:200

* [5]:[0]:[24]:[192.168.30.0]

10.255.255.7 0 65001 65003 65002 65013 ?

RT:65000:200 RT:65013:200 ET:8 Rmac:50:01:00:09:00:03

*> [5]:[0]:[24]:[192.168.30.0]

10.255.255.7 0 65001 65003 65002 65013 ?

RT:65000:200 RT:65013:200 ET:8 Rmac:50:01:00:09:00:03

Route Distinguisher: 10.255.255.8:200

*> [5]:[0]:[24]:[192.168.40.0]

10.255.255.8 0 65001 65003 65002 65014 ?

RT:65000:200 RT:65014:200 ET:8 Rmac:50:01:00:0a:00:03

* [5]:[0]:[24]:[192.168.40.0]

10.255.255.8 0 65001 65003 65002 65014 ?

RT:65000:200 RT:65014:200 ET:8 Rmac:50:01:00:0a:00:03

Route Distinguisher: 10.255.255.11:200

* [5]:[0]:[24]:[192.168.50.0]

10.255.255.11 0 65001 65003 65004 65015 i

RT:65000:200 ET:8 Rmac:50:01:00:cd:ff:68

*> [5]:[0]:[24]:[192.168.50.0]

10.255.255.11 0 65001 65003 65004 65015 i

RT:65000:200 ET:8 Rmac:50:01:00:cd:ff:68

Route Distinguisher: 10.255.255.12:10200

* [5]:[0]:[24]:[192.168.60.0]

10.255.255.12 0 65001 65003 65004 65016 i

RT:65000:200 ET:8 Rmac:50:01:00:11:00:03

*> [5]:[0]:[24]:[192.168.60.0]

10.255.255.12 0 65001 65003 65004 65016 i

RT:65000:200 ET:8 Rmac:50:01:00:11:00:03

# Arista

leaf1-pod3#show bgp evpn route ip-prefix ipv4

BGP routing table information for VRF default

Router identifier 10.255.255.11, local AS number 65015

Route status codes: s - suppressed, * - valid, > - active, E - ECMP head, e - ECMP

S - Stale, c - Contributing to ECMP, b - backup

% - Pending BGP convergence

Origin codes: i - IGP, e - EGP, ? - incomplete

AS Path Attributes: Or-ID - Originator ID, C-LST - Cluster List, LL Nexthop - Link Local Nexthop

Network Next Hop Metric LocPref Weight Path

* > RD: 10.255.255.5:200 ip-prefix 192.168.10.0/24

10.255.255.5 - 100 0 65004 65003 65001 65011 ?

* RD: 10.255.255.5:200 ip-prefix 192.168.10.0/24

10.255.255.5 - 100 0 65004 65003 65001 65011 ?

* > RD: 10.255.255.6:200 ip-prefix 192.168.20.0/24

10.255.255.6 - 100 0 65004 65003 65001 65012 ?

* RD: 10.255.255.6:200 ip-prefix 192.168.20.0/24

10.255.255.6 - 100 0 65004 65003 65001 65012 ?

* > RD: 10.255.255.7:200 ip-prefix 192.168.30.0/24

10.255.255.7 - 100 0 65004 65003 65002 65013 ?

* RD: 10.255.255.7:200 ip-prefix 192.168.30.0/24

10.255.255.7 - 100 0 65004 65003 65002 65013 ?

* > RD: 10.255.255.8:200 ip-prefix 192.168.40.0/24

10.255.255.8 - 100 0 65004 65003 65002 65014 ?

* RD: 10.255.255.8:200 ip-prefix 192.168.40.0/24

10.255.255.8 - 100 0 65004 65003 65002 65014 ?

* > RD: 10.255.255.11:200 ip-prefix 192.168.50.0/24

- - - 0 i

* > RD: 10.255.255.12:10200 ip-prefix 192.168.60.0/24

10.255.255.12 - 100 0 65004 65016 i

* RD: 10.255.255.12:10200 ip-prefix 192.168.60.0/24

10.255.255.12 - 100 0 65004 65016 i

# CVX

cumulus@cumulus:mgmt:~$ net show bgp l2vpn evpn route type prefix

BGP table version is 7, local router ID is 10.255.255.12

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal

Origin codes: i - IGP, e - EGP, ? - incomplete

EVPN type-1 prefix: [1]:[ESI]:[EthTag]:[IPlen]:[VTEP-IP]

EVPN type-2 prefix: [2]:[EthTag]:[MAClen]:[MAC]:[IPlen]:[IP]

EVPN type-3 prefix: [3]:[EthTag]:[IPlen]:[OrigIP]

EVPN type-4 prefix: [4]:[ESI]:[IPlen]:[OrigIP]

EVPN type-5 prefix: [5]:[EthTag]:[IPlen]:[IP]

Network Next Hop Metric LocPrf Weight Path

Extended Community

Route Distinguisher: 10.255.255.5:200

* [5]:[0]:[24]:[192.168.10.0]

10.255.255.5 0 65004 65003 65001 65011 ?

RT:65000:200 ET:8 Rmac:12:9b:9b:d8:f6:86

*> [5]:[0]:[24]:[192.168.10.0]

10.255.255.5 0 65004 65003 65001 65011 ?

RT:65000:200 ET:8 Rmac:12:9b:9b:d8:f6:86

Route Distinguisher: 10.255.255.6:200

* [5]:[0]:[24]:[192.168.20.0]

10.255.255.6 0 65004 65003 65001 65012 ?

RT:65000:200 ET:8 Rmac:50:01:00:02:00:03

*> [5]:[0]:[24]:[192.168.20.0]

10.255.255.6 0 65004 65003 65001 65012 ?

RT:65000:200 ET:8 Rmac:50:01:00:02:00:03

Route Distinguisher: 10.255.255.7:200

* [5]:[0]:[24]:[192.168.30.0]

10.255.255.7 0 65004 65003 65002 65013 ?

RT:65000:200 RT:65013:200 ET:8 Rmac:50:01:00:09:00:03

*> [5]:[0]:[24]:[192.168.30.0]

10.255.255.7 0 65004 65003 65002 65013 ?

RT:65000:200 RT:65013:200 ET:8 Rmac:50:01:00:09:00:03

Route Distinguisher: 10.255.255.8:200

* [5]:[0]:[24]:[192.168.40.0]

10.255.255.8 0 65004 65003 65002 65014 ?

RT:65000:200 RT:65014:200 ET:8 Rmac:50:01:00:0a:00:03

*> [5]:[0]:[24]:[192.168.40.0]

10.255.255.8 0 65004 65003 65002 65014 ?

RT:65000:200 RT:65014:200 ET:8 Rmac:50:01:00:0a:00:03

Route Distinguisher: 10.255.255.11:200

* [5]:[0]:[24]:[192.168.50.0]

10.255.255.11 0 65004 65015 i

RT:65000:200 ET:8 Rmac:50:01:00:cd:ff:68

*> [5]:[0]:[24]:[192.168.50.0]

10.255.255.11 0 65004 65015 i

RT:65000:200 ET:8 Rmac:50:01:00:cd:ff:68

Route Distinguisher: 10.255.255.12:10200

*> [5]:[0]:[24]:[192.168.60.0]

10.255.255.12 0 32768 i

ET:8 RT:65000:200 Rmac:50:01:00:11:00:03
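
The three outputs above can be compared by extracting the type-5 prefixes they list. Below is a minimal Python sketch, assuming the `[5]:[EthTag]:[IPlen]:[IP]` layout given in the VyOS and CVX legends; the helper name `parse_type5` and the sample text are illustrative and not part of the lab files. Duplicate entries (the same prefix received over two paths) are collapsed.

```python
import ipaddress
import re

# Matches the "[5]:[EthTag]:[IPlen]:[IP]" layout shown in the legend above.
TYPE5_RE = re.compile(r"\[5\]:\[(\d+)\]:\[(\d+)\]:\[([^\]]+)\]")


def parse_type5(text):
    """Return the sorted, de-duplicated IP prefixes found in the CLI text."""
    prefixes = set()
    for _eth_tag, ip_len, ip in TYPE5_RE.findall(text):
        prefixes.add(ipaddress.ip_network(f"{ip}/{ip_len}"))
    return sorted(prefixes)


# Illustrative excerpt copied from the VyOS output above.
sample = """
*> [5]:[0]:[24]:[192.168.10.0]
* [5]:[0]:[24]:[192.168.30.0]
*> [5]:[0]:[24]:[192.168.30.0]
"""

for prefix in parse_type5(sample):
    print(prefix)
```

Running the same helper on the saved output of each leaf and comparing the resulting lists is a quick way to confirm that all six client subnets are present everywhere.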

# Now we check connectivity with ping and traceroute from the 3 clients connected to the leaf switches under test

# Client on the VyOS leaf

VPCS> ping 192.168.50.2

84 bytes from 192.168.50.2 icmp_seq=1 ttl=62 time=16.456 ms

84 bytes from 192.168.50.2 icmp_seq=2 ttl=62 time=11.852 ms

^C

VPCS> ping 192.168.60.2

84 bytes from 192.168.60.2 icmp_seq=1 ttl=62 time=5.554 ms

84 bytes from 192.168.60.2 icmp_seq=2 ttl=62 time=4.432 ms

^C

VPCS> trace 192.168.50.2

trace to 192.168.50.2, 8 hops max, press Ctrl+C to stop

1 192.168.10.1 0.499 ms 3.143 ms 1.432 ms

2 192.168.50.1 10.465 ms 12.512 ms 8.992 ms

3 *192.168.50.2 11.056 ms (ICMP type:3, code:3, Destination port unreachable)

VPCS> trace 192.168.60.2

trace to 192.168.60.2, 8 hops max, press Ctrl+C to stop

1 192.168.10.1 0.525 ms 0.312 ms 0.278 ms

2 192.168.60.1 4.949 ms 4.232 ms 4.022 ms

3 *192.168.60.2 4.167 ms (ICMP type:3, code:3, Destination port unreachable)

# Client on the Arista leaf

VPCS> ping 192.168.10.2

84 bytes from 192.168.10.2 icmp_seq=1 ttl=62 time=10.914 ms

^C

VPCS> ping 192.168.60.2

84 bytes from 192.168.60.2 icmp_seq=1 ttl=62 time=11.755 ms

^C

VPCS> trace 192.168.10.2

trace to 192.168.10.2, 8 hops max, press Ctrl+C to stop

1 192.168.50.1 4.742 ms 4.123 ms 4.293 ms

2 192.168.10.1 12.550 ms 15.039 ms 9.411 ms

3 *192.168.10.2 8.939 ms (ICMP type:3, code:3, Destination port unreachable)

VPCS>

VPCS> trace 192.168.60.2

trace to 192.168.60.2, 8 hops max, press Ctrl+C to stop

1 192.168.50.1 3.073 ms 2.901 ms 2.585 ms

2 192.168.60.1 10.815 ms 11.493 ms 12.144 ms

3 *192.168.60.2 10.429 ms (ICMP type:3, code:3, Destination port unreachable)

# Client on the Cumulus leaf

VPCS> ping 192.168.10.2

84 bytes from 192.168.10.2 icmp_seq=1 ttl=62 time=4.731 ms

^C

VPCS> ping 192.168.50.2

84 bytes from 192.168.50.2 icmp_seq=1 ttl=62 time=11.722 ms

^C

VPCS> trace 192.168.50.2

trace to 192.168.50.2, 8 hops max, press Ctrl+C to stop

1 192.168.60.1 0.487 ms 0.266 ms 0.248 ms

2 192.168.50.1 6.691 ms 6.281 ms 6.613 ms

3 *192.168.50.2 9.355 ms (ICMP type:3, code:3, Destination port unreachable)

VPCS>

VPCS> trace 192.168.10.2

trace to 192.168.10.2, 8 hops max, press Ctrl+C to stop

1 192.168.60.1 0.436 ms 0.315 ms 0.380 ms

2 192.168.10.1 4.535 ms 4.661 ms 3.994 ms

3 *192.168.10.2 4.292 ms (ICMP type:3, code:3, Destination port unreachable)
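
The `ttl=62` seen in every ping reply is consistent with the traces: each packet is routed twice (once by the local leaf gateway, once by the remote leaf gateway), while the spine and superspine hops stay hidden inside the VXLAN tunnel. Below is a minimal Python sketch of that arithmetic, assuming the VPCS clients send with an initial TTL of 64 (this value is not stated in the lab); the function name `routed_hops` is illustrative.

```python
def routed_hops(initial_ttl, observed_ttl):
    """Number of L3 hops that decremented the TTL between sender and receiver."""
    if not 0 < observed_ttl <= initial_ttl:
        raise ValueError("observed TTL must be between 1 and the initial TTL")
    return initial_ttl - observed_ttl


# Assumption: the VPCS hosts use an initial TTL of 64.
INITIAL_TTL = 64

# ttl=62 is the value reported in the ping replies above.
print(routed_hops(INITIAL_TTL, 62))
```

Under that assumption the result is 2, matching the two gateway addresses shown as hops 1 and 2 in each trace.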

Conclusion of the lab

These first results are satisfying: they support the idea that VXLAN is a strong option for delivering L2/L3 services, and not only in datacenters. This kind of architecture is increasingly chosen for campus designs, disaster recovery plans, and NFV solutions.

The support of this technology on a growing number of open source NOSes is encouraging for innovation. If we look at the Vyatta project, for example, whose OS can be compiled "brick by brick", we could imagine building NFV CPEs that extend some client VRFs from the VXLAN fabric. These CPEs could be connected to the central fabric through encrypted tunnels (WireGuard, IPsec) or through a direct link protected by MACsec (this feature is on the Vyatta roadmap).

Please contact us if you would like to contribute to the topic or discuss the lab.

Cheers

Pierre L.